Adobe Photoshop has been the center of attention for years, primarily because of its amazing editing features. It has a popular stock library, so you can be as creative as you want.

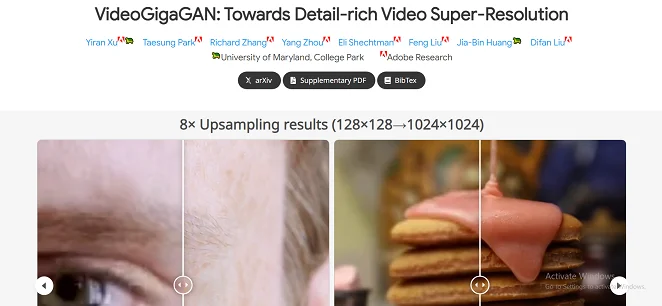

One reason designers and editors choose Adobe Photoshop is that Adobe keeps launching new features. The latest is Adobe VideoGigaGAN, an AI-powered video upscaling feature. According to The Verge, it can upscale videos to eight times their original resolution without distortion or flickering.

So, if you want to improve the quality and resolution of your blurry videos, read on to learn more about this feature!

Let’s uncover these topics one by one.

What Is VideoGigaGAN?

VideoGigaGAN is a generative model for video super-resolution (VSR). It upscales low-resolution, blurry videos into high-resolution videos while preserving temporal consistency and detail. It is designed to outperform earlier VSR methods by reducing flickering between frames, and it can add details and sharpness that those methods could not produce.

The model is built on GigaGAN, a large-scale image upsampler. Earlier VSR models could not generate rich details, while running an image upsampler frame by frame causes flickering. To address this, Adobe added temporal attention, which reduces these artifacts, along with flow-guided feature propagation and anti-aliasing.

Because the upsampler is generative, it can add details that don’t exist in the input, such as skin texture, feathers, and hair. Feature propagation carries features across neighboring frames so that these details stay consistent over time. Anti-aliasing reduces the flickering caused by downsampling, and a high-frequency feature shuttle (HF shuttle) restores the fine detail that anti-aliasing would otherwise remove.

There is speculation that VideoGigaGAN could be integrated into After Effects and Premiere Pro to improve low-resolution shots. However, this is only a rumor, and Adobe hasn’t confirmed any such plans. Meanwhile, companies like Blackmagic Design, NVIDIA, and Microsoft are also working on AI video upscaling.

How Does VideoGigaGAN Work?

VideoGigaGAN extends the asymmetric U-Net architecture of the image-based GigaGAN upsampler so that it can handle video data. It adds several components that enforce temporal consistency across video frames. The process can be broken down into four steps, which we explain below.

- Step 1

To begin with, the image upsampler is inflated to work with videos. For this purpose, temporal attention layers are added to its decoder blocks, allowing the model to capture and use information across frames.
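
The temporal attention idea can be sketched in a few lines of Python. This is a minimal, hypothetical illustration, not Adobe’s implementation: it treats each frame’s feature vector at a single pixel as a token and lets every frame attend to every other frame. The function names, and the simplification of using the features directly as queries, keys, and values (no learned projections, a single head), are assumptions.

```python
import math
from typing import List

Vector = List[float]


def softmax(scores: List[float]) -> List[float]:
    # Subtract the max for numerical stability before exponentiating.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def temporal_attention(frames: List[Vector]) -> List[Vector]:
    """Hypothetical single-head self-attention across the time axis.

    frames[t] is the feature vector of frame t at one spatial location.
    Assumption: queries, keys, and values are the raw features
    (real layers use learned projections and multiple heads).
    """
    dim = len(frames[0])
    scale = 1.0 / math.sqrt(dim)
    out = []
    for q in frames:
        # Scaled dot-product score between this frame and every frame.
        scores = [scale * sum(a * b for a, b in zip(q, k)) for k in frames]
        weights = softmax(scores)
        # Each output is a weighted average of all frames' features.
        out.append([sum(w * v[c] for w, v in zip(weights, frames)) for c in range(dim)])
    return out
```

With identical frames, the function simply returns the input; when frames differ, each output is pulled toward the frames it most resembles, which is one way temporal attention can smooth frame-to-frame inconsistencies.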

- Step 2

In the second step, a flow-guided propagation module further improves temporal consistency. It uses optical flow estimation together with a neural network to align and integrate features across frames, producing temporally aware features.
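
Below is a simplified sketch of flow-guided propagation. It assumes integer optical flow that has already been estimated, nearest-neighbour sampling, and a fixed blend weight `alpha` in place of the learned fusion network. `warp` and `propagate` are hypothetical names for illustration; VideoGigaGAN’s actual module is more sophisticated.

```python
from typing import List, Tuple

Grid = List[List[float]]
Flow = List[List[Tuple[int, int]]]  # per-pixel (dx, dy); integers by assumption


def warp(features: Grid, flow: Flow) -> Grid:
    """Backward-warp previous-frame features onto the current frame.

    flow[y][x] = (dx, dy) means current pixel (x, y) came from
    (x + dx, y + dy) in the previous frame. Coordinates are clamped to
    the border; real modules use sub-pixel (bilinear) sampling.
    """
    h, w = len(features), len(features[0])
    out = []
    for y in range(h):
        row = []
        for x in range(w):
            dx, dy = flow[y][x]
            sx = min(max(x + dx, 0), w - 1)
            sy = min(max(y + dy, 0), h - 1)
            row.append(features[sy][sx])
        out.append(row)
    return out


def propagate(frames: List[Grid], flows: List[Flow], alpha: float = 0.5) -> List[Grid]:
    """Hypothetical recurrent propagation: fuse each frame with the
    flow-aligned hidden state. flows[t] maps frame t back to frame t-1
    (flows[0] is unused). The fixed `alpha` stands in for a learned network.
    """
    hidden = frames[0]
    outputs = [hidden]
    for t in range(1, len(frames)):
        aligned = warp(hidden, flows[t])
        hidden = [
            [alpha * f + (1 - alpha) * a for f, a in zip(frow, arow)]
            for frow, arow in zip(frames[t], aligned)
        ]
        outputs.append(hidden)
    return outputs
```

If an object moves one pixel to the right between frames, a flow of `(-1, 0)` tells each pixel to fetch its feature from one pixel to the left, so the warped hidden state lines up with the new frame before the two are blended.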

- Step 3

In this step, anti-aliasing blocks replace the regular downsampling layers in the encoder. Plain downsampling introduces aliasing artifacts, so the anti-aliasing blocks apply a low-pass filter before subsampling, which minimizes temporal flickering in the output video.
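
The difference between plain and anti-aliased downsampling is easy to see on a 1D signal. This sketch assumes the common BlurPool choice of a [1, 2, 1] / 4 blur kernel with stride 2; the kernel size and padding VideoGigaGAN actually uses are not stated here, and the function names are hypothetical.

```python
from typing import List


def blur(signal: List[float]) -> List[float]:
    """Low-pass filter with the binomial kernel [1, 2, 1] / 4.

    Borders use reflect padding (mirror without repeating the edge
    sample), so the signal needs at least two samples.
    """
    n = len(signal)
    out = []
    for i in range(n):
        left = signal[i - 1] if i > 0 else signal[1]
        right = signal[i + 1] if i < n - 1 else signal[n - 2]
        out.append((left + 2 * signal[i] + right) / 4)
    return out


def naive_downsample(signal: List[float]) -> List[float]:
    # Plain stride-2 subsampling: keeps every other sample, no filtering.
    return signal[::2]


def blurpool(signal: List[float]) -> List[float]:
    # Anti-aliased downsampling: low-pass filter first, then subsample.
    return blur(signal)[::2]
```

On the alternating pattern `[1, -1, 1, -1, ...]`, plain subsampling returns a constant `[1, 1, 1, 1]`, and shifting the input by one sample flips the result to `[-1, -1, -1, -1]`. BlurPool returns zeros in both cases. A small shift between frames causing a large change in output is exactly the kind of instability that appears as flicker in video.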

- Step 4

The last step applies a high-frequency feature shuttle. Anti-aliasing removes some high-frequency detail, so the shuttle transfers high-frequency features directly to the decoder through skip connections, bypassing the BlurPool (blur-then-subsample) layers. As a result, the video keeps sharp details and textures.
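
The HF shuttle can be illustrated by extending the same 1D example. In this simplified sketch, which is an assumption rather than Adobe’s design, the encoder splits the signal into a blurred (low-frequency) part that gets downsampled and a high-frequency residual that is passed straight to the decoder, mimicking a skip connection that bypasses BlurPool. `encode` and `decode` are hypothetical names.

```python
from typing import List, Tuple


def blur(signal: List[float]) -> List[float]:
    # [1, 2, 1] / 4 low-pass filter with reflect padding (needs len >= 2).
    n = len(signal)
    return [
        ((signal[i - 1] if i > 0 else signal[1])
         + 2 * signal[i]
         + (signal[i + 1] if i < n - 1 else signal[n - 2])) / 4
        for i in range(n)
    ]


def encode(signal: List[float]) -> Tuple[List[float], List[float]]:
    """Hypothetical encoder step: BlurPool plus a high-frequency shuttle.

    The residual (signal minus its blurred version) is the detail that
    BlurPool discards; it is returned separately for the skip connection.
    """
    low = blur(signal)
    high = [s - l for s, l in zip(signal, low)]
    return low[::2], high


def decode(down: List[float], high: List[float], length: int,
           use_shuttle: bool = True) -> List[float]:
    # Nearest-neighbour upsampling back to the original length.
    up = [down[i // 2] for i in range(length)]
    if not use_shuttle:
        return up
    # Re-inject the shuttled high-frequency detail.
    return [u + h for u, h in zip(up, high)]
```

For the alternating signal `[1, -1] * 4`, the blurred path carries nothing, so decoding without the shuttle returns all zeros, while decoding with it recovers the original detail.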

What Problems Is It Solving?

Video editing can be complicated, and editors have long wanted better upscaling tools. VideoGigaGAN resolves many of these issues. It maintains temporal consistency across output frames while generating high-quality frames with fine detail, which is the core goal of video super-resolution.

This matters because previous models focused mainly on consistency, which left details blurred and textures unrealistic. VideoGigaGAN’s novel approach addresses both problems, promising videos that are temporally consistent and rich in detail.

Limitations of Adobe VideoGigaGAN

While this new AI feature promises exceptional upscaling and higher video resolution through its temporal modules, it still has some limitations. Here are a few of them.

1. Inability to Handle Long Videos

It can have trouble processing very long videos, particularly those with a large number of frames. For instance, issues have been reported with videos of 200 frames or more.

2. Cannot Work on Small Details

VideoGigaGAN struggles to super-resolve small objects, especially those with fine details such as text, patterns, and hair.

3. Extensive Model Size

The model is large because several components, such as temporal attention layers and the flow-guided propagation module, are added on top of the U-Net architecture. The payoff is impressive temporal consistency.

4. Reliance on Optical Flow Accuracy

The quality of the flow-guided propagation module depends on how accurately optical flow is estimated between video frames. If the flow estimate is inaccurate, temporal consistency suffers. Occlusions, complex scenes, and large motions are common causes of inaccurate flow, so inconsistencies remain possible in such footage.
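
A common way to check how well optical flow aligns two frames is the warping error: warp the previous frame with the estimated flow and compare it with the current frame. The 1D sketch below uses hypothetical function names and integer flow as a simplifying assumption; it shows how a wrong flow estimate leaves content misaligned, which is what leads to inconsistencies in the propagated output.

```python
from typing import List


def warp_1d(prev: List[float], flow: List[int]) -> List[float]:
    # Backward warp: current sample x is fetched from prev[x + flow[x]],
    # clamped to the signal's borders. Integer flow is an assumption.
    n = len(prev)
    return [prev[min(max(x + flow[x], 0), n - 1)] for x in range(n)]


def warping_error(prev: List[float], curr: List[float], flow: List[int]) -> float:
    """Mean absolute difference between the current frame and the warped
    previous frame. Low values mean the flow aligns the frames well."""
    aligned = warp_1d(prev, flow)
    return sum(abs(c - a) for c, a in zip(curr, aligned)) / len(curr)
```

With `prev = [0, 0, 9, 0, 0, 0]` and `curr = [0, 0, 0, 9, 0, 0]` (an object moving one step right), the correct flow `[-1] * 6` gives an error of 0, whereas assuming no motion gives 3.0.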

Difference from Previous Models

Compared with previous models, this AI feature promises sharper textures and finer details while maintaining reliable temporal consistency, even at 8x upsampling. It reportedly improves perceived image quality over nine state-of-the-art (SOTA) VSR models, including TTVSR and BasicVSR++.

According to the qualitative results, the output frames show fine details along with temporal coherence. It also achieves good PSNR/SSIM results on datasets such as Vimeo-90K-T and REDS4, while showing impressive temporal consistency.
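
PSNR compares an upscaled frame with its ground-truth high-resolution frame using the standard formula PSNR = 10 · log10(MAX² / MSE), where higher is better. A minimal Python version is shown below; treating images as flat lists of 8-bit pixel values is an assumption for brevity, and the function name is illustrative. SSIM is more involved and is not shown.

```python
import math
from typing import Sequence


def psnr(reference: Sequence[float], output: Sequence[float],
         max_val: float = 255.0) -> float:
    """Peak signal-to-noise ratio in dB between two equally sized images,
    given as flat sequences of pixel values (8-bit range by default)."""
    if len(reference) != len(output):
        raise ValueError("images must have the same number of pixels")
    # Mean squared error over all pixels.
    mse = sum((r - o) ** 2 for r, o in zip(reference, output)) / len(reference)
    if mse == 0:
        return math.inf  # identical images
    return 10 * math.log10(max_val ** 2 / mse)
```

For example, `psnr([0], [1])` is about 48.13 dB, and identical images return infinity.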

The Potential

VideoGigaGAN can be used for more than upscaling videos. For example, it can enhance archival footage and other low-quality recordings. It also has real-time video processing features, so it can help with corporate and family videos. In the future, content creators and editors will be able to use it to improve the quality of their videos.

What Does AIChief Say?

We think that VideoGigaGAN is a huge step in the video upscaling niche. It uses the power of GANs and a large generative model to add fine details.

We also expect this technology to evolve a lot and soon be integrated into everyday apps and software, keeping severe temporal flickering at bay. All in all, the future is bright, and AIChief will be here to update you on the latest developments.

The Bottom Line

It’s safe to say that AI in video is getting more attention. Just a few years ago, artificial intelligence was rarely involved in video generation and manipulation, and the area received little attention.

Going forward, AI-powered editing software will become efficient enough to run on smartphones. Similarly, you won’t need ultra-high-resolution camera hardware to shoot high-resolution videos, because a large-scale upsampler can handle the upscaling.

On a concluding note, AI will expand in the video industry, and that’s exciting. It will change the way we shoot, edit, and watch video content. So, can you see how it will revolutionize video upscaling?