In today’s AI landscape, smaller language models have drawn attention for their potential to run directly on devices such as smartphones. Among the latest entries is OpenELM, a family of open language models from Apple aimed at on-device AI.

Apple has released more than the models themselves. The release includes model weights, training logs, and details of the training data and procedure, so that the open research community can inspect and reproduce the work.

What are OpenELM Models?

Apple’s OpenELM, which stands for “Open-source Efficient Language Models,” is a family of compact language models designed to run directly on Apple devices, with an emphasis on efficiency and performance.

Compared to large language models, OpenELM models are compact. They use a layer-wise scaling strategy to allocate parameters within each layer of the transformer, so that the layers do not all have to be the same size. According to Apple, this improves accuracy for a given parameter budget.

Apple also says that allocating parameters non-uniformly in this way makes better use of a fixed budget, which lowers the computational resources needed to reach a given level of accuracy.
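To make the idea of layer-wise scaling more concrete, here is a minimal sketch in Python. It assumes the per-layer width is linearly interpolated between a minimum and a maximum from the first layer to the last. The function name, the `alpha`/`beta` multipliers, and every number below are hypothetical values chosen for illustration, not Apple’s actual configuration.

```python
from dataclasses import dataclass


@dataclass
class LayerConfig:
    num_heads: int  # attention heads in this layer
    ffn_dim: int    # hidden size of this layer's feed-forward block


def layer_wise_scaling(num_layers, d_model, head_dim,
                       alpha_min, alpha_max, beta_min, beta_max):
    """Give each layer its own width by interpolating two multipliers.

    Assumption: alpha scales the attention width and beta scales the
    feed-forward width, both growing linearly with layer depth.
    """
    configs = []
    for i in range(num_layers):
        # Relative depth of the layer in [0, 1]; a one-layer model uses 0.
        t = i / (num_layers - 1) if num_layers > 1 else 0.0
        alpha = alpha_min + (alpha_max - alpha_min) * t
        beta = beta_min + (beta_max - beta_min) * t
        num_heads = max(1, round(alpha * d_model / head_dim))
        ffn_dim = round(beta * d_model)
        configs.append(LayerConfig(num_heads, ffn_dim))
    return configs


# Hypothetical settings, for illustration only.
configs = layer_wise_scaling(num_layers=4, d_model=1024, head_dim=64,
                             alpha_min=0.5, alpha_max=1.0,
                             beta_min=1.0, beta_max=4.0)
for index, cfg in enumerate(configs):
    print(index, cfg.num_heads, cfg.ffn_dim)
```

With these settings the early layers are narrower and the later layers wider, in contrast to a uniform design where every layer has the same number of heads and the same feed-forward size.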

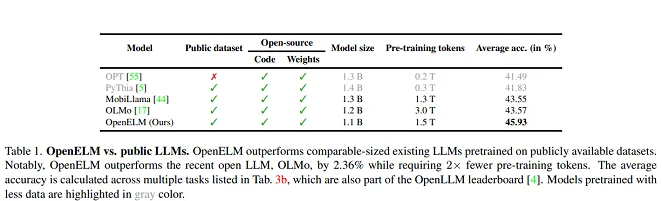

In its paper, Apple reports that, at a budget of about one billion parameters, OpenELM is 2.36% more accurate than OLMo, another small open language model from the Allen Institute for AI, while using half as many pre-training tokens.

Apple’s Open Language Model Training and Data Sources

Apple’s models are trained on publicly available datasets that together contain approximately 1.8 trillion tokens. This broad pool of data is intended to prepare the models for a wide range of tasks while reducing the risk of data and model biases.

The training data combines four public sources: RefinedWeb, a deduplicated version of the Pile, a subset of RedPajama, and a subset of Dolma v1.6. According to Apple, these sources account for the roughly 1.8 trillion tokens used in training.

In addition, Apple’s models use a context window of 2048 tokens, which caps how much text a model can take in at once.
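One practical consequence of the 2048-token context window is that longer inputs must be split before the model can process them. The sketch below shows one simple way to do that, assuming the text has already been converted to a list of token IDs. The function name and the non-overlapping chunking scheme are illustrative choices, not part of Apple’s release.

```python
CONTEXT_WINDOW = 2048  # context length stated for OpenELM


def split_into_windows(token_ids, window=CONTEXT_WINDOW):
    """Split a token sequence into consecutive chunks of at most `window` tokens."""
    if window <= 0:
        raise ValueError("window must be a positive integer")
    return [token_ids[i:i + window] for i in range(0, len(token_ids), window)]


# Hypothetical input: 5000 placeholder token IDs.
tokens = list(range(5000))
chunks = split_into_windows(tokens)
print([len(chunk) for chunk in chunks])
```

Real applications often overlap adjacent chunks or summarize earlier text so that context is not lost at chunk boundaries. This sketch keeps the chunks disjoint for simplicity.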

Understanding OpenELM Models

Apple’s OpenELM models are available in eight variants, divided into two categories: Pretrained and instruction-tuned. Pretrained models offer a raw version suitable for various tasks, while instruction-tuned models are fine-tuned for specific functions like AI assistants and chatbots.

- OpenELM-270M

- OpenELM-450M

- OpenELM-1_1B

- OpenELM-3B

- OpenELM-270M-Instruct

- OpenELM-450M-Instruct

- OpenELM-1_1B-Instruct

- OpenELM-3B-Instruct
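The variant names above encode both the model size and whether the model is instruction-tuned. As a small illustration, the following Python sketch parses a name into an approximate parameter count and an instruction-tuned flag. The helper `parse_variant` is hypothetical, and it assumes that the underscore in names such as `1_1B` stands for a decimal point.

```python
import re

VARIANTS = [
    "OpenELM-270M", "OpenELM-450M", "OpenELM-1_1B", "OpenELM-3B",
    "OpenELM-270M-Instruct", "OpenELM-450M-Instruct",
    "OpenELM-1_1B-Instruct", "OpenELM-3B-Instruct",
]

_PATTERN = re.compile(r"^OpenELM-(\d+(?:_\d+)?)([MB])(-Instruct)?$")
_UNITS = {"M": 1_000_000, "B": 1_000_000_000}


def parse_variant(name):
    """Return (approximate parameter count, is_instruction_tuned)."""
    match = _PATTERN.match(name)
    if match is None:
        raise ValueError(f"unrecognized variant name: {name}")
    number, unit, instruct = match.groups()
    # Assumption: "1_1" in a variant name means 1.1.
    params = round(float(number.replace("_", ".")) * _UNITS[unit])
    return params, instruct is not None


for variant in VARIANTS:
    params, instruct = parse_variant(variant)
    kind = "instruction-tuned" if instruct else "pretrained"
    print(f"{variant}: about {params:,} parameters, {kind}")
```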

A Comparative Analysis Of Apple OpenELM With Other Models

We previously discussed Microsoft’s Phi-3 models, which likewise aim to deliver effective language processing in small models that run locally. Apple’s OpenELM models share that goal but have distinct characteristics and capabilities.

Let us compare its different aspects.

Comparing Parameters

Microsoft’s Phi-3-mini has 3.8 billion parameters. Apple’s OpenELM models, by contrast, come in a range of sizes from 270 million to 3 billion parameters. This range makes it possible to match model size to the task and the device.

Meta’s Llama 3 and OpenAI’s GPT-3

Meta’s Llama 3 is far larger, at up to 70 billion parameters, with a 400-billion-parameter version in the works. OpenAI’s GPT-3, released in 2020, has 175 billion parameters. Parameter count is only a rough measure of a model’s size and capacity, but at 270 million to 3 billion parameters, OpenELM is among the smaller language models available.
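To see why these parameter counts matter for on-device use, a rough back-of-the-envelope estimate of weight storage helps. The sketch below assumes 16-bit weights (2 bytes per parameter) and counts only the weights themselves, ignoring activations, caches, and runtime overhead. The parameter counts are the ones quoted in this article, and the helper name is illustrative.

```python
BYTES_PER_PARAM_FP16 = 2  # assumption: weights stored in 16-bit precision

# Parameter counts as quoted in this article.
MODELS = {
    "OpenELM (smallest)": 270e6,
    "OpenELM (largest)": 3e9,
    "Phi-3-mini": 3.8e9,
    "Llama 3 70B": 70e9,
    "GPT-3": 175e9,
}


def weight_memory_gb(num_params, bytes_per_param=BYTES_PER_PARAM_FP16):
    """Approximate storage for the weights alone, in gigabytes (10^9 bytes)."""
    return num_params * bytes_per_param / 1e9


for name, params in MODELS.items():
    print(f"{name}: ~{weight_memory_gb(params):.2f} GB of weights")
```

Under these assumptions, the smallest OpenELM model needs roughly half a gigabyte for its weights, while the 70-billion and 175-billion-parameter models need hundreds of gigabytes, well beyond what a phone can hold in memory.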

Transparency and Reproducibility

With OpenELM, Apple goes beyond the common practice of releasing only model weights and inference code. It also shares the training and evaluation framework, training logs, and multiple checkpoints. This level of transparency is intended to encourage open research and accountability in AI development.

The Data and Model Biases Of Apple’s OpenELM

Apple acknowledges the risk of data and model biases. Because the models are trained on public datasets and released without safety guarantees, Apple advises users and developers to carry out thorough safety testing and to implement appropriate filtering mechanisms for their own use cases.

In addition, Apple has released code to convert the models to its MLX library, so that developers can run inference and fine-tuning on Apple devices. The release also includes the pre-training configurations. Together, these resources give the open research community a foundation for building capable small language models.

Future Implications and Open Research

As Apple devices evolve to incorporate on-device AI features, the potential impact of OpenELM extends beyond consumer applications. With its complete framework and enhanced accuracy, OpenELM is advancing open research endeavors in AI language models.

AIChief’s Remarks

Based on the discussion above, AIChief is optimistic about the future of this open-source model family. OpenELM represents a notable shift in the world of on-device AI models. By providing a comprehensive suite of tools and resources, Apple enables researchers and developers to explore new opportunities in AI while keeping the process transparent and accountable.