Dioptra AI Alternatives


Top 10 Dioptra AI Alternatives

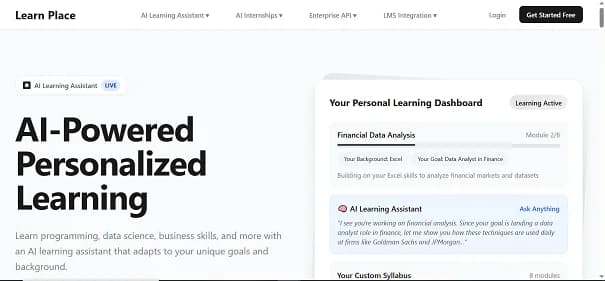

1. Learn Place AI

2. Grokipedia

3. CopyCreator

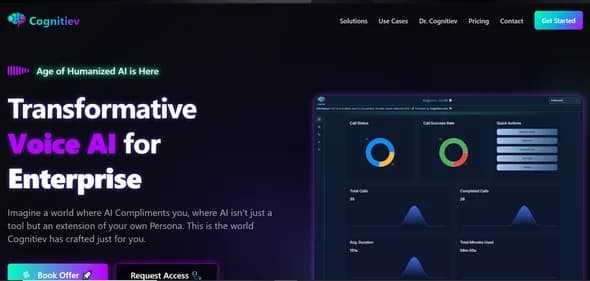

4. Cognitiev PRO

5. Creator Supply

6. Creative Magic Panel

7. Creativ.io

8. CreateWebsite.io

9. Craiyon AI

10. CoverLetterBuddy

List of Dioptra AI Alternatives

Learn Place AI

Learn Place is an AI-powered personalized learning platform for students, professionals, and lifelong learners. It helps users build skills in programming, data science, business, and other subjects through adaptive learning. Its AI Learning Assistant creates a custom syllabus based on your background, goals, and motivation, so you can focus on what matters most and skip what you already know.

Unlike traditional online courses with fixed structures, Learn Place adapts to your progress and performance. Users can ask questions, practice with interactive exercises, and upload their own content to guide their learning. The clean web-based interface works across all subjects, and the freemium pricing model makes AI-driven education widely accessible. With NLP-based tutoring and adaptive syllabus generation, it functions as an always-available personal tutor.

Learn Place stands out for its contextual, tailored approach, but some users may prefer more visual or instructor-led options. Exploring Learn Place alternatives can help you find adaptive learning tools with richer video lessons, offline access, or broader subject libraries.
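Learn Place does not document how its syllabus generator works. As a rough illustration of the "skip what you already know" idea, the following minimal sketch assumes a hypothetical topic graph (each topic lists its prerequisites) and a hypothetical mastery score between 0 and 1 per topic. It orders topics so prerequisites come first and drops anything the learner has already mastered.

```python
from graphlib import TopologicalSorter

# Hypothetical topic graph: each topic maps to the topics it depends on.
TOPICS = {
    "python_basics": set(),
    "data_structures": {"python_basics"},
    "pandas": {"python_basics"},
    "data_cleaning": {"pandas"},
    "machine_learning": {"data_structures", "data_cleaning"},
}


def build_syllabus(topics, mastery, threshold=0.8):
    """Return an ordered study plan that skips topics the learner already knows.

    `mastery` maps topic -> score in [0, 1]; missing topics count as 0.
    The 0.8 cut-off is an illustrative assumption, not Learn Place's rule.
    """
    # Order every topic so that prerequisites come before the topics needing them.
    order = TopologicalSorter(topics).static_order()
    # Keep only topics the learner has not yet mastered, preserving that order.
    return [topic for topic in order if mastery.get(topic, 0.0) < threshold]


plan = build_syllabus(TOPICS, {"python_basics": 0.95, "pandas": 0.9})
print(plan)
```

A real adaptive system would also weigh the learner's goals and update mastery after each exercise; this sketch only shows the filtering and ordering step.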

Grokipedia

Grokipedia is an AI-powered online encyclopedia created by xAI. Built on the Grok language model, it automates article writing, fact-checking, and editing, aiming to provide faster updates and less editorial bias than traditional platforms. It launched in October 2025 with around 885,000 entries, offering a dark-themed interface and an AI-curated approach to information discovery.

Designed for researchers, students, and curious readers, Grokipedia serves as a quick reference hub where articles are updated continuously through AI rather than manual editing. Its goal is a dynamic alternative encyclopedia that balances speed with accessibility. However, some articles contain errors or closely resemble Wikipedia content, reflecting the early-stage limitations of AI-driven knowledge systems. Even so, it has drawn attention for its model of automated knowledge curation.

Grokipedia stands out for its speed, simplicity, and AI-first design. Users who need higher reliability, verified sourcing, or broader coverage may want to explore Grokipedia alternatives that combine AI automation with expert human review.

CopyCreator

CopyCreator is an AI-powered writing assistant that simplifies content generation for individuals, freelancers, and businesses. It produces a wide range of text formats, including blog posts, product descriptions, social media captions, and marketing copy. Users enter prompts in a simple interface and quickly receive polished, structured content in their chosen tone, which saves time while keeping output consistent across channels.

The platform uses natural language processing to adapt its style to context so that generated material stays relevant and engaging. Customization features let users match their brand voice and tailor content to their goals. A free trial lets first-time users try the tool, while the paid plan unlocks unlimited generation and priority support.

Although CopyCreator is versatile, it lacks deeper integrations and mobile access, which some businesses may find limiting. Teams that need advanced collaboration or specialized long-form capabilities may find stronger options among CopyCreator alternatives.

Cognitiev PRO

Cognitiev PRO is an AI-powered platform that supports technical, creative, and educational tasks. It integrates language models such as GPT-3.5 and GPT-4 and offers more than 20 specialized chat modes and over 150 prompts for different scenarios.

Developers can use its Ultimate Coder mode for code analysis, debugging, and improvement suggestions, while writers can craft narratives with its creative writing features. Students get book and movie explainers for learning support, entrepreneurs can explore AI-generated business plans through specialized CxO personas, and artists can use its art analysis modes. The platform emphasizes privacy by storing data locally and runs as a Progressive Web App across devices.

Its versatility makes Cognitiev PRO appealing to anyone who wants one tool for both professional and creative work. Users who need broader integrations or free access may prefer an alternative, so comparing other AI assistants alongside Cognitiev PRO can help you find the best fit.

Creator Supply

Creator Supply is an AI content ideation platform that simplifies campaign planning for creators, marketers, and brand managers. It turns minimal input, such as audience, product, or platform, into complete creative briefs with messaging hooks, tone guidance, and structured content outlines. By combining natural language generation with marketing insights, it produces ideas tailored to each channel, from UGC scripts and TikTok trends to Instagram ad concepts, in minutes.

Its intuitive interface and fast output save time without sacrificing strategic depth, which makes it useful for freelancers, agencies, and in-house teams. Features include persona modeling, platform-specific suggestions, and direct export to tools like Notion and Docs.

While the tool excels at ideation and brief generation, some teams need deeper analytics, post-launch tracking, or advanced creative integrations. In those cases, exploring Creator Supply alternatives can help find a more comprehensive solution.

Creative Magic Panel

Creative Magic Panel is a Photoshop extension that brings AI into photo retouching, making complex edits faster and easier. Designed for photographers, designers, and editors, it streamlines time-consuming tasks such as skin retouching, body reshaping, and color correction. The panel includes AI-powered face and body recognition, smart frequency separation, and customizable overlays, giving users precision while saving editing time. Its intuitive interface lets beginners achieve professional-quality results without advanced Photoshop expertise.

Compatible with both Mac and Windows, Creative Magic Panel fits into existing Photoshop workflows. Batch editing, luminosity masking, color grading, and image correction filters cover both creative enhancements and technical refinements. Its one-time pricing makes it cost-effective compared with subscription-based tools.

Because it runs inside Photoshop, some users may prefer standalone apps or mobile-friendly AI editors. Comparing other options alongside Creative Magic Panel helps users choose the best tool for their editing style and needs.
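Frequency separation, listed among the panel's features, is a standard retouching technique: the image is split into a low-frequency layer (a blurred copy carrying tone and color) and a high-frequency layer (the original minus the blur, carrying fine texture such as pores). Retouchers smooth the low layer and leave the texture intact. The sketch below is not Creative Magic Panel's code; it shows the idea on a small grayscale image stored as nested lists and assumes a simple box blur as the smoothing filter (Photoshop workflows usually use a Gaussian blur).

```python
def box_blur(img, radius=1):
    """Average each pixel with its neighbours in a (2r+1) x (2r+1) window.

    Pixels near the edge average only the neighbours that exist.
    """
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            total, count = 0.0, 0
            for dy in range(-radius, radius + 1):
                for dx in range(-radius, radius + 1):
                    ny, nx = y + dy, x + dx
                    if 0 <= ny < h and 0 <= nx < w:
                        total += img[ny][nx]
                        count += 1
            out[y][x] = total / count
    return out


def separate(img, radius=1):
    """Split an image into (low, high) layers such that low + high == img."""
    low = box_blur(img, radius)
    high = [[p - l for p, l in zip(row, lrow)] for row, lrow in zip(img, low)]
    return low, high


def recombine(low, high):
    """Add the layers back together pixel by pixel."""
    return [[l + h for l, h in zip(lrow, hrow)] for lrow, hrow in zip(low, high)]


img = [[10, 20, 30], [40, 50, 60], [70, 80, 90]]
low, high = separate(img)
# Retouch: smooth only the tone layer, then add the untouched texture back.
smoothed = recombine(box_blur(low, radius=1), high)
```

Because `high` is added back unchanged, blurring `low` evens out tone and color while the fine detail survives, which is the point of separating the two frequency bands.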

Creativ.io

Creativio AI is an AI-powered design platform that turns basic product photos into professional visuals for sales and marketing. It automates editing tasks such as background removal, lighting adjustment, and scene generation, producing polished images in seconds. The platform serves e-commerce sellers, marketers, influencers, and small business owners who want a stronger online presence without paying for professional photography or editing services.

With image upscaling, text annotations, and batch processing, Creativio AI can produce high-quality visuals at scale. Its one-time payment model is more affordable than monthly subscriptions, especially for entrepreneurs seeking long-term value, and its intuitive interface delivers studio-like results without advanced design skills.

While Creativio AI excels in simplicity and affordability, alternatives like Canva, Pixelcut, or Remove.bg may appeal to users who want mobile apps, more editing flexibility, or collaborative features. Exploring these options alongside Creativio AI helps users find the best fit for their creative needs.

CreateWebsite.io

CreateWebsite.io is an AI-powered website builder for creating professional websites without coding. It uses AI to generate responsive designs, SEO-friendly content, and customizable templates, helping users quickly launch sites for businesses, portfolios, blogs, or e-commerce. It is built for entrepreneurs, freelancers, nonprofits, and small businesses that need a cost-effective way to create websites that look professional and perform well.

Features include an AI design assistant, integrated content generation, SEO optimization, and real-time editing. A free plan covers basic needs, while premium plans unlock unlimited pages, custom domains, and advanced hosting. Responsive templates and a drag-and-drop interface make it suitable for beginners while remaining useful for professionals.

While CreateWebsite.io is affordable and user-friendly, alternatives like Wix, Squarespace, or Framer may suit users who need more advanced customization, marketing integrations, or e-commerce capabilities. Comparing options helps you choose the right builder for your website goals.

Craiyon AI

Craiyon AI is a free web-based text-to-image generator that turns written prompts into visuals within seconds. Previously known as DALL·E mini, it uses diffusion models to interpret natural language and generates nine images per request. It is popular among artists, marketers, educators, and creators who need quick concept visuals without expensive design tools.

The tool offers unlimited prompts, which makes it easy to experiment. Free users can generate images at 256×256 resolution with upscaling available, while paid plans unlock faster rendering, higher resolution, watermark-free downloads, and private image generation. This lets casual users explore AI art freely while giving professionals more control.

Craiyon AI stands out for its simplicity and open access, but its results can lack the polish and detail of premium tools. If you need higher-quality output, mobile apps, or deeper customization, comparing alternatives alongside Craiyon AI can help you find the most suitable image generation platform.

CoverLetterBuddy

CoverLetterBuddy is an AI-powered assistant that helps job seekers write personalized cover letters for each application. By analyzing resumes, job descriptions, and role-specific details, it generates professional letters that highlight relevant skills and align with career goals.

The platform is designed for simplicity. Its clean, beginner-friendly, mobile-ready interface allows quick edits and instant downloads. Users can adjust tone, regenerate drafts, and edit content to match their personal voice, while resume parsing and contextual matching keep each letter specific rather than generic. For students, career changers, and professionals applying globally, it saves significant time.

A free plan lets occasional applicants try the platform, while paid tiers unlock higher limits and advanced customization for active job hunters submitting many applications each week. With affordable pricing, it is accessible to most users, though job seekers who want broader career support may also want to explore CoverLetterBuddy alternatives.

CoverLetter-AI

CoverLetter-AI is a web-based platform that creates personalized, professional cover letters in minutes. You enter details such as your name, role, company, and job description, and the tool generates a polished, ready-to-use draft. It is designed to help first-time applicants, freelancers, and career professionals quickly produce documents tailored to each application.

Unlike subscription services, CoverLetter-AI uses a lifetime credit system in which one credit equals one cover letter. Users receive a free starting credit and can buy additional packs whose credits never expire, which keeps costs flexible for both occasional and high-volume applicants. The simple interface offers previews without requiring sign-up, and the AI engine uses contextual prompts and NLP to make each letter unique and relevant across roles and industries.

However, it lacks ATS keyword optimization and advanced editing features. Applicants who need deeper customization may find more value in CoverLetter-AI alternatives.
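The credit model described for CoverLetter-AI (one credit per letter, a free starting credit, purchased credits that never expire) is simple bookkeeping. The following minimal sketch models it with a hypothetical `CreditAccount` class; the class, method, and exception names are illustrative assumptions, not CoverLetter-AI's actual API.

```python
class InsufficientCredits(Exception):
    """Raised when a letter is requested with no credits left (hypothetical)."""


class CreditAccount:
    """Hypothetical ledger: 1 credit = 1 cover letter, credits never expire."""

    def __init__(self, free_credits=1):
        # New users start with a free credit, as the platform describes.
        self.balance = free_credits
        self.letters_generated = 0

    def buy_pack(self, credits):
        # Purchased credits are simply added; there is no expiry date to track.
        if credits <= 0:
            raise ValueError("pack size must be positive")
        self.balance += credits

    def generate_letter(self):
        # Each generated letter consumes exactly one credit.
        if self.balance < 1:
            raise InsufficientCredits("buy a credit pack to continue")
        self.balance -= 1
        self.letters_generated += 1
        return self.letters_generated


account = CreditAccount()
account.generate_letter()  # uses the free starting credit
account.buy_pack(5)
```

Since credits carry no expiry, the ledger needs only a running balance; a subscription model would instead track billing periods and reset allowances.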

CoverLetter GPT

CoverLetter GPT is an AI-powered platform that helps job seekers write professional cover letters in minutes. Built on GPT-4 Turbo, it generates polished, job-ready drafts from simple inputs such as job title, company, and relevant experience. Designed for speed and convenience, it suits students, freelancers, and professionals applying to many roles. Users can edit drafts before exporting them as PDF or Word (DOCX) files.

Unlike many subscription services, CoverLetter GPT uses a one-time payment model, with letter bundles starting at $0.99, which makes it an affordable choice for applicants who want to avoid ongoing costs. Its clean, straightforward interface is easy for beginners.

The platform focuses solely on letter writing: there is no resume builder or job tracking. Users looking for more comprehensive career tools may benefit from exploring CoverLetter GPT alternatives.

Corti AI

Corti AI is a real-time voice intelligence platform that supports healthcare professionals and emergency dispatchers during live calls. It analyzes conversations as they happen, detecting patterns and identifying critical symptoms such as cardiac arrest, and displays on-screen guidance to support immediate decisions. After calls, Corti serves as a coaching tool that helps teams improve communication, response time, and accuracy. It integrates with call centers and clinical workflows and continuously learns from interactions to improve patient outcomes.

The platform is best suited for emergency dispatchers, healthcare professionals, call center QA teams, and hospital systems. Key features include real-time voice analysis, AI symptom detection, cardiac arrest recognition, on-screen prompts, post-call coaching, QA workflow integration, performance dashboards, HIPAA-compliant data handling, and customizable medical models. Together these help professionals respond faster, reduce errors, and improve training while maintaining privacy and compliance standards.

Corti AI is not free; it uses custom enterprise pricing based on deployment size, number of users, and integration requirements. While it excels at real-time analysis and clinical support, smaller teams may prefer alternatives with more accessible or self-serve plans. Exploring other AI voice intelligence platforms can help organizations find a solution that fits their workflow and budget.

CorrectEnglish

CorrectEnglish is an AI-powered writing assistant that goes beyond simple grammar checks. It analyzes spelling, punctuation, sentence structure, and style in real time, offering contextual guidance to make writing clear, professional, and error-free. Built-in vocabulary suggestions and plagiarism detection help ensure originality in every draft.

The platform is available on the web, as browser extensions, and as mobile apps, making it accessible to students, professionals, and writers on the go. It handles essays, business emails, resumes, and blog content, and its writing templates (MLA, APA, and more) make it particularly useful for academic and research work. A key advantage is its ESL support, which gives non-native speakers explanations and learning tools as they write. For job seekers, it simplifies polishing resumes and cover letters.

The free plan covers the essentials, while advanced features such as plagiarism scanning and unlimited word checks require a paid tier. With flexible pricing, CorrectEnglish is a practical option for anyone who wants to improve their written communication.

Copylime

Copylime is an AI-powered writing assistant designed for speed and simplicity, helping users generate SEO-friendly content in seconds. It focuses on structured blog posts, product descriptions, and website copy without unnecessary complexity. Users enter a keyword or topic and instantly receive well-formatted text with strategic keyword placement, which makes it especially useful for marketers aiming to improve search rankings.

The platform offers flexible pricing, including a free plan for beginners, pay-as-you-go word packs for occasional users, and an affordable unlimited monthly plan for high-volume creators. Its clean interface lets even non-technical users produce polished content quickly, making Copylime an attractive option for small business owners, freelancers, and agencies that need scalable writing support.

While Copylime excels at speed and affordability, it has fewer integrations and advanced editing tools than more robust platforms. Users who need collaboration features, deeper customization, or long-form storytelling may find a better fit among Copylime alternatives, depending on their content strategy.
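Copylime does not publish how it handles "strategic keyword placement". A common way to check placement in any draft is to measure keyword density and confirm that the keyword appears in the title and opening paragraph. The following sketch does that; the function name, the returned fields, and the paragraph-splitting rule (blank lines) are assumptions for illustration, and acceptable density ranges vary between SEO guidelines.

```python
import re


def keyword_report(title, body, keyword):
    """Return simple on-page SEO checks for a (possibly multi-word) keyword."""
    kw = keyword.lower().split()
    if not kw:
        raise ValueError("keyword must not be empty")
    # Tokenize the body into lowercase words, ignoring punctuation.
    words = re.findall(r"[a-z0-9']+", body.lower())
    n = len(kw)
    # Count occurrences of the keyword phrase as a run of consecutive words.
    hits = sum(1 for i in range(len(words) - n + 1) if words[i:i + n] == kw)
    # Paragraphs are assumed to be separated by a blank line.
    first_paragraph = body.strip().split("\n\n")[0].lower()
    return {
        "occurrences": hits,
        # Density = share of body words that belong to keyword occurrences.
        "density": hits * n / len(words) if words else 0.0,
        # Plain substring checks; good enough for a quick sketch.
        "in_title": keyword.lower() in title.lower(),
        "in_first_paragraph": keyword.lower() in first_paragraph,
    }


report = keyword_report(
    "Best Running Shoes for Beginners",
    "Choosing running shoes is hard.\n\nGood running shoes last longer.",
    "running shoes",
)
```

Density alone is a blunt signal; placement checks such as title and first-paragraph presence are usually reported alongside it.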

ConvertRocket AI

ConvertRocket AI is an AI-powered conversion optimization platform that helps businesses automate engagement, capture leads, and improve performance reporting. It combines unlimited chatbot capabilities with real-time analytics, so teams can interact with customers instantly and track meaningful KPIs. Conversational chatbots deployed on a website can guide visitors, qualify leads, and optimize sales funnels while keeping the user experience smooth.

The platform also generates automated reports that measure engagement, conversion rates, and message flows, making it easier to identify strengths and weaknesses in a funnel. One-click setup and an intuitive interface make it accessible to startups, small businesses, and agencies looking for affordable ways to scale marketing and sales.

At $49 per month for unlimited access, ConvertRocket AI offers strong value, but it lacks a free plan and the advanced integrations found in enterprise solutions. It is a practical choice for teams that want hands-off conversion optimization, though comparing alternatives helps you select the best solution for your business.
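The automated reports described for ConvertRocket AI revolve around standard funnel metrics. As a sketch, assuming a hypothetical list of funnel stages with visitor counts (the stage names and numbers below are made up), step-by-step and overall conversion rates can be computed, and the weakest step identified, like this:

```python
def funnel_report(stages):
    """Compute step and overall conversion rates for ordered funnel stages.

    `stages` is a list of (name, count) pairs from first touch to final goal.
    Each row of the result is (name, count, step_rate, overall_rate).
    """
    report = []
    first = stages[0][1] if stages else 0
    prev = None
    for name, count in stages:
        # Step rate: share of the previous stage that reached this one.
        step = 1.0 if prev is None else (count / prev if prev else 0.0)
        # Overall rate: share of the first stage that reached this one.
        overall = count / first if first else 0.0
        report.append((name, count, step, overall))
        prev = count
    return report


def weakest_step(report):
    """Name the stage with the lowest step rate (skipping the first stage)."""
    return min(report[1:], key=lambda row: row[2])[0]


stages = [
    ("visitors", 2000),
    ("chat_started", 500),
    ("lead_captured", 100),
    ("demo_booked", 25),
]
rows = funnel_report(stages)
```

Comparing step rates rather than only the overall rate is what makes it possible to say which part of the funnel is underperforming.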

Control Audits

Control Audits is a cybersecurity and governance, risk, and compliance (GRC) consulting firm based in Auckland, New Zealand. It helps businesses strengthen their security posture while meeting standards and frameworks such as ISO/IEC 27001, the NIST Cybersecurity Framework, and the Essential Eight. Its services include IT security audits, governance frameworks, third-party cyber risk management, and business continuity planning. The firm also offers expertise in AI governance and security, an area of growing importance as organizations adopt AI.

Serving startups, small businesses, and established enterprises, Control Audits tailors its services to each client. By combining in-depth assessments with expert consultation, it helps organizations identify vulnerabilities, mitigate risks, and stay compliant. Its focus on New Zealand and Australia makes it well suited to businesses in regulated industries in those markets.

Control Audits is a strong choice for companies seeking comprehensive cybersecurity and compliance services. Still, businesses may want to explore alternatives with different pricing models or additional specialized services.

ContextQA

ContextQA is an AI-powered test automation platform that aims to make software testing faster, more accurate, and less resource-intensive. Its no-code and low-code approach lets teams create, manage, and run tests without deep programming expertise. AI features such as auto-healing, visual regression testing, and root cause analysis reduce maintenance overhead while improving reliability.

The platform supports UI, API, mobile, and cross-browser testing, which makes it versatile for startups, enterprises, and product teams. It integrates with CI/CD pipelines and offers real-time reporting to keep quality at the center of software delivery. QA engineers, developers, and product managers benefit from its accessible interface and analytics, and businesses can release updates with more confidence.

Although ContextQA offers a free plan, advanced features require higher-tier plans, whose pricing may not suit smaller businesses. ContextQA is a robust option for modern test automation, but exploring alternatives can help teams find the best fit for their development workflows.
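Visual regression testing, one of the ContextQA features listed, compares a new screenshot against an approved baseline and fails when too many pixels change. ContextQA's implementation is not public; the following is a minimal sketch assuming both screenshots are same-sized grids of RGB tuples, with a hypothetical per-channel tolerance of 8 and a failure threshold of 1% changed pixels.

```python
def diff_ratio(baseline, candidate, tolerance=8):
    """Fraction of pixels whose RGB channels differ by more than `tolerance`."""
    if len(baseline) != len(candidate) or any(
        len(a) != len(b) for a, b in zip(baseline, candidate)
    ):
        raise ValueError("screenshots must have the same dimensions")
    changed = total = 0
    for row_a, row_b in zip(baseline, candidate):
        for pa, pb in zip(row_a, row_b):
            total += 1
            # A small tolerance ignores anti-aliasing and compression noise.
            if any(abs(ca - cb) > tolerance for ca, cb in zip(pa, pb)):
                changed += 1
    return changed / total if total else 0.0


def check_visual(baseline, candidate, max_ratio=0.01):
    """Return True when the candidate matches the baseline closely enough."""
    return diff_ratio(baseline, candidate) <= max_ratio


WHITE = (255, 255, 255)
base = [[WHITE] * 10 for _ in range(10)]
one_changed = [row[:] for row in base]
one_changed[0][0] = (255, 0, 0)  # one red pixel out of 100
```

The tolerance and threshold trade off false alarms against missed regressions; production tools typically also let you mask dynamic regions such as timestamps.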

ContentDrips

ContentDrips is a content creation platform that simplifies personal branding and social media growth. It provides customizable templates, carousel builders, and AI-powered writing tools that help users design and publish posts without design expertise. It is especially popular among LinkedIn creators, offering a smooth workflow for crafting carousels, text posts, and visuals that drive audience interaction.

For marketing agencies and social media managers, ContentDrips supports team collaboration and brand management, making it easier to handle multiple clients. Freelancers and coaches benefit from its plug-and-play interface, while SaaS companies use it to share updates and stories in a professional format. Its AI post writer and scheduling features make content production efficient, consistent, and scalable.

ContentDrips offers a free plan, but advanced features require a paid subscription. The platform is best suited to creators focused on LinkedIn; those who need wider publishing options or deeper analytics may prefer other tools. Exploring ContentDrips alternatives can reveal tools better tailored to diverse social media strategies.

Content Redefined

Content Redefined is an AI-powered writing platform that automates and scales SEO-focused content creation. It uses GPT-4o and proprietary AI models to generate, rewrite, and polish natural-sounding, publication-ready text, from blog posts and landing pages to product descriptions.

The tool is designed for efficiency: it automatically edits and formats output, reducing manual revision before publishing. This makes it particularly valuable for marketers, agencies, and e-commerce teams producing content in bulk. Usage-based pricing lets it scale from individual bloggers to enterprise teams producing thousands of words each month.

Content Redefined stands out for its speed, simplicity, and built-in optimization. However, it lacks collaboration tools and advanced formatting controls that some teams need. Businesses seeking more flexibility, integrations, or creative customization may find better-suited tools among Content Redefined alternatives.

Content Codex

Content Codex is an AI-powered content strategy platform that helps creators, marketers, and businesses plan campaigns. Instead of starting from scratch, users can generate complete blueprints with suggested topics, business ideas, and structured content strategies. Powered by models such as GPT-4 Turbo, it delivers actionable insights in minutes.

Its clean dashboard supports branded PDF exports, topic discovery, and AI-driven idea generation. Freelancers can deliver client-ready reports, while startups and agencies benefit from scalable features such as white-labeling and API access. Whether you need a quick content outline or a 50-page marketing strategy, flexible credit-based plans let Content Codex scale with your needs.

The platform suits individuals seeking structure, small businesses wanting affordable strategy support, and larger teams that need automation at scale. However, some users may prefer tools with real-time collaboration or CMS integration, so exploring Content Codex alternatives can be worthwhile.

Content Assistant

Content Assistant is an AI-powered writing platform that helps content teams create, optimize, and manage branded communication. Combining natural language processing with machine learning, it generates blog posts, landing pages, social copy, and even internal documents for marketers, agencies, and startups, adapting to brand guidelines for consistent tone and messaging.

Beyond generating new content, Content Assistant also works as an editor: it rewrites drafts, suggests style changes, and offers SEO improvements that save writers and strategists time. Real-time collaboration provides a unified workspace for teams, the interface keeps the workflow simple, and tiered pricing serves individuals, growing teams, and larger organizations.

Content Assistant stands out for balancing creativity and structure, serving as both a copywriter and an operational tool for scaling content output. Users who need more advanced analytics, integrations, or multilingual support may benefit from exploring Content Assistant alternatives.

Contaact Card

Contaact Card is a digital business card platform that replaces paper cards with personalized virtual ones. Individuals and organizations can design and share branded cards that include contact details, job roles, websites, and custom branding. Cards can be shared by email, QR code, or direct link, so recipients do not need an app.

The platform targets professionals, sales teams, and businesses of all sizes that want to modernize networking. Entrepreneurs can showcase their brand identity, small teams can distribute cards across employees, and enterprises can personalize onboarding or event experiences. An AI-driven personalization engine helps each card look professional and unique.

Pricing starts at one dollar per card, an affordable entry point for individuals, with bulk packages for larger teams. Although it lacks advanced analytics and a mobile app, it remains a practical option for digital-first networking. Those who need more advanced features may want to explore Contaact Card alternatives.

ConnectFlux AI

ConnectFlux AI is an AI-powered platform that streamlines lead generation and email outreach for sales teams, marketers, and recruiters. It provides access to over 200 million business records, letting users search for, unlock, and export verified email addresses with advanced filters. Beyond the contact database, it offers AI-driven content personalization, automated campaign management, and inbox monitoring. Users can run unlimited campaigns, write cold emails with built-in AI tools, and track responses, helping teams reach decision-makers faster with less manual work.

Notable features include AI-powered lead scoring, automated email flows, and bulk export. The platform supports high-volume campaigns, unlimited inbox integrations, and AI content generation of up to 1.4 million characters per month. It suits B2B sales teams, solo founders, SaaS growth marketers, recruiters, and agencies looking for scalable lead generation, and its clean no-code dashboard makes hyper-personalized campaigns straightforward to set up.

ConnectFlux AI currently supports only English and lacks native CRM integration. Teams with different outreach goals, budgets, or multilingual needs may find better-suited tools among other AI lead generation platforms.
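ConnectFlux AI does not document how its lead scoring works. As a generic illustration of the idea, many lead-scoring systems combine weighted signals into a single score and rank leads by it. The following sketch uses hypothetical signal names and weights chosen only for the example; a real system would tune or learn them from outcomes.

```python
from dataclasses import dataclass

# Hypothetical weights for illustration only.
WEIGHTS = {
    "email_verified": 20,
    "decision_maker": 30,
    "company_size_fit": 25,
    "opened_last_email": 15,
    "replied": 40,
}


@dataclass
class Lead:
    email: str
    signals: dict  # signal name -> bool


def score(lead, weights=WEIGHTS):
    """Sum the weights of every signal that is true for this lead."""
    return sum(w for name, w in weights.items() if lead.signals.get(name, False))


def rank(leads, min_score=0):
    """Return leads at or above `min_score`, highest score first."""
    kept = [lead for lead in leads if score(lead) >= min_score]
    return sorted(kept, key=score, reverse=True)


leads = [
    Lead("a@example.com", {"email_verified": True, "decision_maker": True}),
    Lead("b@example.com", {"email_verified": True, "replied": True}),
    Lead("c@example.com", {"opened_last_email": True}),
]
prioritized = rank(leads, min_score=20)
```

A fixed weight table is the simplest design; "AI-powered" scoring usually replaces it with a model trained on which leads actually converted.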

Computerender

Computerender is an AI platform that helps developers integrate Stable Diffusion models into their applications. It supports both text-to-image and image-to-image generation for creating visual content across industries. By distributing GPU requests globally, the platform aims to deliver fast performance, stable results, and cost-effective scaling.

Computerender offers a developer-friendly API and clear documentation, so teams can adopt AI image generation without managing complex infrastructure. Startups can add dynamic visuals to their products, designers can generate graphics from text prompts or existing images, and researchers and educators can experiment using its free tier.

The platform stands out for its global GPU distribution, flexible pricing, and reliable support. While it is effective for image generation, users who need broader AI capabilities may prefer multimodal alternatives. Comparing other options alongside Computerender can help identify the right fit for specific development goals.
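Computerender says it distributes GPU requests globally but does not describe its routing logic. One common approach is least-loaded dispatch with a latency tiebreak. The following sketch assumes hypothetical region names, queue lengths, latencies, and capacities; it is neither Computerender's implementation nor its API.

```python
from dataclasses import dataclass


@dataclass
class GpuRegion:
    name: str
    queued_jobs: int
    latency_ms: float  # measured round-trip time from the client
    capacity: int  # maximum jobs this region accepts in its queue


def pick_region(regions):
    """Choose the region with the shortest queue, breaking ties by latency.

    Regions whose queues are full are skipped; returns None if all are full.
    """
    available = [r for r in regions if r.queued_jobs < r.capacity]
    if not available:
        return None
    return min(available, key=lambda r: (r.queued_jobs, r.latency_ms))


def dispatch(regions, n_jobs):
    """Assign jobs one at a time, updating queue lengths as we go."""
    assignments = []
    for _ in range(n_jobs):
        region = pick_region(regions)
        if region is None:
            break  # every region is saturated; the caller should retry later
        region.queued_jobs += 1
        assignments.append(region.name)
    return assignments


regions = [
    GpuRegion("us-east", 2, 40.0, 5),
    GpuRegion("eu-west", 1, 90.0, 5),
    GpuRegion("ap-south", 1, 150.0, 2),
]
plan = dispatch(regions, 4)
```

Preferring queue length over latency keeps slow-but-idle regions busy; swapping the tuple order in the `min` key would favor nearby regions instead.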

Compara.cat

Compara.cat is an AI-driven product comparison platform that helps consumers make better purchasing decisions by analyzing thousands of user reviews. Instead of overwhelming shoppers with raw opinions, it filters out biased and fake reviews to deliver clear, trustworthy summaries. Users simply provide a product link, and the platform generates side-by-side insights highlighting strengths and weaknesses.

Compara.cat is available in Catalan, Spanish, and English, and through a web interface, mobile apps, and a Chrome extension, so comparisons are available on any device. From parents searching for reliable baby products to tech enthusiasts comparing gadgets, the platform adapts to different shopping needs, and real-time data processing keeps its results current.

Because Compara.cat is completely free, it stands out as a highly accessible alternative to paid review-analysis tools. Shoppers who want broader product coverage or more advanced recommendation features, however, may want to explore alternatives.

Colorway AI

Colorway AI is a browser-based design assistant that turns text prompts into editable UI designs in seconds. With its Figma-first approach, it generates layouts that follow real design logic, including structure, visual hierarchy, and branding rules. Instead of starting from a blank canvas, users describe their vision and see it turned into a working mockup. This makes the tool especially useful for startup founders, product teams, and non-designers who want to move faster without deep design expertise.

One of Colorway AI’s strongest benefits is its Figma integration: every generated design remains editable, so users keep full control after the AI lays the foundation. The platform includes responsive layouts, dark mode styling, brand tokens, and reusable components. Teams can collaborate on prototypes, refine brand-specific templates, and move quickly from idea to publish-ready visuals. Flexible pricing and a free plan provide an accessible entry point for solo makers and growing companies alike.

Compared with traditional design tools, Colorway AI can save hours of prototyping and makes professional design more approachable. Alternatives may offer deeper interaction design or UX flow capabilities, so businesses evaluating design automation should compare it with other AI-powered UI tools to find the right balance of speed, flexibility, and creative freedom for their design and collaboration needs.

ColoringMaker

ColoringMaker is an AI-powered web app that creates printable coloring pages from prompts, sketches, or uploaded drawings. Using text-to-image models and vectorization AI, it turns ideas into clean, black-and-white line art suitable for kids, classrooms, or creative projects. Users can enter a prompt like “space dinosaurs” or upload a drawing, and the system quickly generates a polished design ready to print. This makes it a valuable tool for parents, teachers, and Etsy sellers who want fast, custom artwork.

The platform is easy to use, with instant previews, multiple style choices, and options to adjust line thickness or output format. Educators can design curriculum-themed worksheets, while digital creators can produce coloring books for commercial resale. The free plan allows a limited number of generations, while credit packs and subscriptions unlock unlimited downloads, commercial rights, and premium features.

While ColoringMaker excels at fast, creative page generation, some users may prefer a mobile app or tools with broader customization. Those who need more flexible design workflows should explore ColoringMaker alternatives.

Cmd Haus

Cmd Haus is an AI-powered command center platform that optimizes business operations through automation, data analysis, and real-time insights. It centralizes workflows so teams can collaborate, track progress, and align on performance goals more easily. By automating repetitive tasks, Cmd Haus reduces manual effort, increases efficiency, and supports better decision-making across departments.

The platform offers workflow automation, customizable dashboards, and advanced analytics that surface actionable insights in real time. Integration with existing business systems lets companies unify data sources without disrupting established processes, and its mobile app and web access keep executives, operations managers, and project teams connected and informed from anywhere.

Cmd Haus stands out for combining real-time decision support with collaboration tools that scale to businesses of all sizes. Pricing, however, is available only through custom quotes. Businesses looking to optimize workflows should compare Cmd Haus with alternative automation and analytics platforms to find the best operational fit.

Claud Dreams

Claud Dreams is an AI-powered mobile app that helps users record and interpret their dreams. Using AI models, the app analyzes the symbolic elements of each dream and offers personalized insights. Designed for introspective users and anyone interested in dream psychology, it lets people track and reflect on their dreams without complex journaling. Its minimalist, calming interface is built to fit naturally into the moments before or after sleep.

Claud Dreams offers AI-powered dream interpretation and symbolic context extraction, and it supports both short and long entries. The app is free to download and use as a basic journal, but each interpretation is paid for with coins. This pay-per-use model suits occasional users who prefer not to subscribe, though frequent users may find themselves buying coins regularly.

The app is currently available only on iOS. Those seeking more advanced tracking or clinical analysis may benefit from exploring alternatives.

Cladwell

Cladwell is an AI-powered wardrobe app that helps you plan daily outfits from the clothes you already own. Aimed at style-conscious minimalists, busy professionals, and eco-minded individuals, it promotes intentional fashion, discourages overbuying, and focuses on building a functional capsule wardrobe.

Users upload their wardrobe items, and the AI suggests daily outfits tailored to their preferences, the weather, and their personal goals. Key features include an AI outfit recommendation engine, weather-based suggestions, a closet logging system, and style analytics.

Cladwell stands out by promoting sustainable fashion and reducing decision fatigue. Other wardrobe management apps such as Stylebook, Pureple, and Combyne offer similar functionality, with different outfit planning features, style analytics, or community engagement options. Exploring these platforms can help users find the wardrobe app that best matches their fashion and organizational needs.

Chinese Feng Shui Online

Chinese Feng Shui Online is a digital platform that blends classical Chinese metaphysics with modern technology to deliver personalized Feng Shui insights. Aimed at spiritual seekers, homeowners, entrepreneurs, interior designers, and families, it brings traditional wisdom to a global audience.

The platform uses birth data to perform BaZi analysis and maps energy patterns, lucky directions, and room arrangements. Key features include personalized BaZi destiny charts, energy flow and Feng Shui reports, lucky directions, and auspicious dates, with AI-assisted calculations making these insights easier to access.

Chinese Feng Shui Online stands out by pairing ancient wisdom with AI-assisted calculations for personalized guidance. Other Feng Shui and astrology platforms, such as AstroSage, Sanctuary AI, and Co-Star, offer similar functionality and may provide different divination methods, personal calendar integration, or community features. Exploring several platforms can help users find the metaphysical tool that best fits their spiritual and decision-making needs.

Checklist.gg

Checklist.gg is an AI-powered platform that helps teams create, manage, and execute checklists and standard operating procedures (SOPs) efficiently. It uses AI to generate custom checklists from user input, documents, or links, making it adaptable to different industries. Real-time collaboration features let teams assign tasks, track progress, and maintain accountability across projects, and its user-friendly interface means even non-technical users can streamline their workflows.

The platform stands out with customizable templates, recurring schedules, and integrations with popular tools, making it a reliable hub for operations. Project managers can coordinate tasks, HR teams can optimize onboarding, and compliance officers can write detailed SOPs to meet regulatory standards. Affordable pricing and role-based access controls make it accessible to small teams while remaining scalable for enterprises.

Some businesses may prefer alternatives that offer offline access, mobile apps, or more advanced customization, so comparing other platforms alongside Checklist.gg helps organizations find the tool best suited to their workflow needs.

Chatzap

Chatzap is an AI-powered sales chatbot built to increase engagement and conversions for SaaS companies and online businesses. It analyzes your website content to generate personalized responses that guide visitors through their journey, improving the customer experience while capturing leads. Setup requires no coding, making it accessible to businesses of all sizes, and support for 95 languages helps brands reach wider audiences and serve international customers.

The tool offers lead capture, customizable chat widgets, and real-time chat logs that inform follow-up. Its Shopify integration lets e-commerce businesses improve product discovery and automate sales without separate tools. Affordable pricing and a user-friendly design make it especially appealing to startups and small businesses seeking practical automation.

Some users may want alternatives with advanced analytics, API integrations, or broader platform compatibility. Exploring other chatbot platforms alongside Chatzap can help businesses find the best solution for their needs.

ChattyGPT

ChattyGPT is a mobile AI chat assistant that offers smart, conversational experiences through an intuitive interface. Aimed at casual users, students, and anyone curious about AI, the app lets users chat with an AI that answers questions, helps with writing tasks, and holds natural conversations. Whether you want quick answers or just someone to talk to, ChattyGPT provides a friendly, responsive AI experience on your phone.

The app offers multiple in-app purchase tiers that unlock advanced capabilities such as smarter AI engines, premium assistants, and extended chat functionality. Prices range from $0.29 for entry-level upgrades to $59.99 for more advanced features.

ChattyGPT suits personal productivity and entertainment but is not geared toward enterprise use or advanced professional tasks. It lacks a single full-featured subscription, so different capabilities require separate purchases, and it is limited to mobile, with no desktop or web version. Users seeking more robust professional tools may want to consider alternatives.

Chatty Tutor

Chatty Tutor is an AI-powered language learning tool that helps English learners improve fluency through interactive, personalized practice. It combines dialogue shadowing, vocabulary training with AI-generated images, and pronunciation assessment in a hands-on approach that keeps learners engaged. Customizable AI prompts, voice settings, and speech speed let users tailor the learning experience to their needs, and the platform runs on macOS and in web browsers, giving students, professionals, and self-learners flexibility.

What makes Chatty Tutor stand out is its use of AI-generated visuals and dialogue tools that replicate real conversation practice. Learners get accurate pronunciation feedback, topic-based chats, and synchronized chat history, creating a structured yet flexible environment for steady progress. Educators can also use it to supplement traditional teaching, giving students more opportunities to practice outside class.

Users who want features Chatty Tutor does not offer, such as multi-language support or free access, may find it useful to explore alternatives.

Chatsome

Chatsome is an AI-powered chatbot platform that helps businesses automate customer service and sales conversations. Companies can create branded chatbots without coding, making it accessible to startups, enterprises, and agencies. With natural language processing and GPT integration, Chatsome chatbots give accurate, contextual responses that improve customer satisfaction while reducing the support workload.

The platform is built to scale, from small online shops to large enterprises handling thousands of conversations daily. Businesses can train chatbots on their own data, customize them to match their brand identity, and deploy them within minutes. Chatsome also handles order information, product queries, and lead generation, making it valuable for e-commerce and customer support teams.

Pricing starts at $398 per month, with premium and enterprise tiers for higher message volumes and advanced integrations. Although the platform is powerful, the lack of a free trial may deter smaller businesses. Chatsome is a strong option for customer automation, but exploring alternatives can help identify the right solution for specific business needs.

ChatScribe Pro

ChatScribe Pro is an AI-powered transcription and content generation platform for creators, professionals, and global teams. Users can upload or record audio and video files, which are transcribed with up to 98 percent accuracy. The platform also translates content into more than 100 languages, making it valuable for international communication.

What makes ChatScribe Pro stand out is its combination of transcription, translation, and AI writing. It integrates models such as GPT-4, Claude, Gemini Pro, and Llama 3.1 to generate articles, blog posts, and social media updates, so users can both capture spoken content and repurpose it into ready-to-publish material. Additional features such as speaker time analysis, sentiment detection, and a multilingual chatbot add to its versatility.

ChatScribe Pro uses a freemium model, with paid plans starting at $5 per month. Even so, exploring alternatives can help professionals identify the transcription and content creation tool that best fits their specific needs.

ChatPhoto

ChatPhoto is an AI-powered app that converts images into text and delivers interactive insights. Users can ask questions about their photos and receive detailed answers, which makes it more than a simple OCR tool. From generating social media captions to identifying landmarks and translating signs, it adds context and meaning to visual content, and support for more than 56 languages makes it useful for travelers, language learners, and international users.

Content creators can generate captions and stories directly from photos, e-commerce sellers can write product descriptions from images, and educators and students can extract knowledge from educational visuals. Key features include image-to-text conversion, interactive Q&A, caption generation, and object recognition; users can even get recipe ideas from food photos or fashion suggestions from outfit images. Its intuitive iOS interface is designed for both personal and professional use.

ChatPhoto offers a free tier with limited daily credits, while premium plans provide unlimited access. Users who need broader platform compatibility or additional features may want to explore alternatives.

Chathero

Chathero is an AI-powered chatbot platform that helps businesses automate conversations and improve customer experiences. It lets companies set up intelligent chatbots without coding, making it accessible to small teams and large organizations alike. Through its user-friendly interface, businesses can design custom chatflows and manage all interactions from a single inbox, and integrations with WhatsApp and Facebook Messenger enable customer engagement across popular channels.

The platform is built for efficiency, offering instant responses, real-time booking, and GDPR-compliant hosting for secure data management. Multilingual support helps businesses reach diverse audiences worldwide. Chathero stands out by combining AI-driven personalization with sales and lead automation, which makes it useful for e-commerce, healthcare, and service industries. Drag-and-drop design tools simplify setup, while ChatGPT integration adds advanced conversational capabilities.

Because needs vary, some users may prefer similar tools, so it is worth comparing features and exploring alternatives to Chathero.

Chatform

Chatform is an AI-powered, no-code chatbot builder designed for rapid deployment and customer support automation. Unlike traditional solutions, it can create live bots in under 60 seconds, which makes it especially attractive to businesses in the fast-paced online gaming industry. Its strength lies in human-like reasoning and empathetic ticket handling, which let it handle up to 80 percent of customer inquiries without human intervention.

The platform includes multilingual support, sentiment analysis, and integrations with tools like Zapier, making it versatile across gaming contexts such as console platforms, e-sports, betting apps, and PC gaming. Its intuitive interface lets users customize workflows, embed bots on websites or apps, and start engaging customers immediately, reducing the burden on CX teams while keeping interactions high-quality and human.

Chatform offers a free plan, but premium pricing is not clearly disclosed, which may be a limitation for larger businesses. Organizations that need enterprise-grade automation, deeper integrations, or transparent pricing should compare Chatform with alternative chatbot builders.

ChatData

ChatData is an AI-powered analytics tool that makes analyzing spreadsheet data through conversation simple and fast. Users upload CSV or Excel files and ask questions in natural language; large language models and AI chart generation then return instant insights, with no complex BI software or manual formulas required. The platform summarizes data in real time, highlights trends, and generates professional charts suitable for presentations.

Business owners can quickly understand sales and customer reports, analysts can automate routine reporting, and students can gain rapid insights for projects. The chat-based interface is intuitive and requires no technical expertise. After a free trial, paid plans start at $10/month and unlock unlimited queries and chart exports.

ChatData is affordable for startups and small teams, though users who need advanced integrations or work with more complex datasets may benefit from exploring alternative tools.

Chat Whisperer

Chat Whisperer is an AI-powered chatbot platform that helps businesses automate customer support, boost engagement, and streamline lead generation. With its no-code chatbot builder and multilingual support, companies can deploy AI chatbots in minutes without technical expertise. NLP and GPT integration enable natural, context-aware conversations that improve customer satisfaction. It suits e-commerce businesses, real estate agencies, customer service teams, and SMEs that need scalable AI to manage inquiries, recommend products, or schedule appointments.

Beyond basic chat, Chat Whisperer offers CRM integration, analytics dashboards, and customizable interfaces, making it a complete engagement and sales tool. A free trial is available, and paid plans start at $99/month, with options for professional customization and unlimited interactions at the enterprise level.

While Chat Whisperer excels in usability and multilingual support, some businesses may prefer alternatives with lower entry pricing or more omnichannel automation. Comparing similar platforms such as Tidio, ManyChat, or Intercom alongside Chat Whisperer can help identify the best fit for your customer engagement strategy.

Chat Thing

Chat Thing is an AI chatbot creation platform powered by ChatGPT that lets users build custom bots trained on their own data sources, such as websites, Notion docs, and uploaded files. Bots can be deployed across multiple channels, including Slack, Discord, WhatsApp, Telegram, and websites, so businesses can deliver personalized, consistent support wherever their audience engages.

The platform is beginner-friendly yet robust, offering analytics dashboards, bot customization, and integrations with tools like Zapier. It also includes safeguards such as response guards to reduce AI hallucinations. The free plan lets users start with one chatbot and basic limits, while paid plans scale up to enterprise-level features with advanced analytics, unlimited usage, and priority support.

While Chat Thing excels in accessibility and multi-channel support, it has no dedicated mobile app, reserves advanced features for higher-tier plans, and offers limited offline capabilities. Businesses comparing chatbot platforms may also want to explore Chat Thing alternatives that focus on deeper CRM integrations or fully transparent pricing.

Chat Prompt Genius

Chat Prompt Genius is a community-driven platform that makes prompt creation and discovery easier for anyone using AI tools. It provides a large library of categorized prompts covering roles, tasks, and creative use cases. Users can save, edit, and share prompts and build personalized collections to work more efficiently, while role-based templates and search-friendly categories help them get better outputs from ChatGPT and other large language models.

The platform serves as both a creativity hub and a practical knowledge base for developers, writers, marketers, and AI beginners. Its simple interface focuses on usability, allowing quick navigation through prompts without distractions. Because it is open-access and donation-supported, all features are free, with no locked tiers, and community contributions continually expand the library and keep it relevant.

Chat Prompt Genius stands out for its collaborative approach and diverse prompt coverage, which make it highly useful for everyday AI work. Since it lacks mobile apps and direct AI integrations, however, users who want advanced workflow tools may prefer to explore alternatives.

Chaport

Chaport is an all-in-one customer messaging platform that helps businesses communicate with customers in real time. It offers live chat, chatbots, multi-channel support (including email, Facebook, and Telegram), and a knowledge base for customer self-service. Detailed reports, analytics, and cross-device synchronization help teams manage customer inquiries efficiently.

Chaport suits a wide range of users, from startups looking to improve engagement without high costs to large enterprises that need to scale their support teams. E-commerce businesses benefit in particular, since instant messaging can help boost sales and customer satisfaction. Its easy-to-use interface brings interactions from every channel together, and features such as automated responses and automatic chat invitations make it a strong solution for streamlining customer support and sales.

Chaport’s free plan covers basic features, while paid plans unlock advanced options such as integrations and custom branding. If you’re looking for more options, other customer messaging tools offer similar functionality.

CapCut

CapCut is an all-in-one, AI-powered video editing tool that helps users create professional-quality videos with little effort. It caters to content creators, marketers, businesses, and video editors of all skill levels, and it runs on both mobile and desktop devices.

CapCut’s wide array of AI-driven tools automates complex tasks such as background removal and video stabilization, and it also provides video upscaling and an extensive library of templates. Key features include an AI video generator, AI text-to-speech, and a slow-motion editor.

CapCut stands out for its intuitive interface and robust AI features for quick video production. Other video editors with AI features, such as InVideo, DaVinci Resolve, and Adobe Premiere Pro, also provide powerful editing capabilities and may offer different AI tools, template libraries, or integration options. Exploring several platforms can help users find the video editing tool that best fits their creative and professional needs.

Cangrade

Cangrade is an AI-powered talent intelligence platform that helps businesses hire smarter, retain employees longer, and build stronger teams. It combines pre-hire assessments, predictive analytics, and workforce development tools to deliver data-driven talent insights. By analyzing both soft and hard skills, it helps companies identify top candidates, forecast retention, and reduce the risk of bad hires. Its ethical AI approach aims to ensure fair, bias-free decision-making that supports diversity and inclusion goals.

Cangrade integrates with applicant tracking systems (ATS) and HR platforms, so it fits into existing workflows. The platform also provides video interviewing, structured interview guides, and Jules AI Copilot, which assists managers with talent decisions. For HR teams, recruiters, and business leaders, Cangrade improves hiring efficiency while supporting long-term employee growth.

Cangrade requires custom pricing and setup, but it offers strong ROI through reduced turnover and smarter workforce planning. Small businesses may want to consider alternatives with transparent pricing.

Calculator Air

Calculator Air is an AI-powered mobile calculator app that handles everyday math as well as complex equations. Its sleek interface includes a scientific calculator, percentage and unit converters, and a camera-based AI math solver: users take a picture of a math problem, and the app returns an answer with step-by-step explanations. Built for students, educators, and anyone who wants a fast, intuitive calculation tool, Calculator Air pairs accuracy and speed with an interactive, mobile-optimized layout.

The app uses real-time analysis and OCR (Optical Character Recognition) to read scanned math problems. Calculator Air is a freemium app: it is free to download, but full access to AI solving and premium tools requires a paid subscription. Premium plans cost $7.99 per week, $14.99 per month, or $69.99–$99.99 per year.

Bulk Content AI

Bulk Content AI is an automation-focused writing platform that generates SEO-ready articles, product descriptions, and posts at scale. It removes the manual effort of producing repetitive content: users upload CSV files with keywords, topics, or product data, and the platform generates export-ready text in minutes. It is designed for digital marketers, online sellers, and agencies that need high-volume content without sacrificing search optimization. Built-in keyword optimization tools, customizable templates, and export to DOCX, TXT, or CSV let teams quickly prepare content for blogs, e-commerce catalogs, and social media campaigns.

Bulk Content AI’s standout feature is bulk generation, which can cut content timelines from weeks to hours. Some manual editing may still be needed for creative polish, but the tool excels in speed, consistency, and scalability, whether you are producing hundreds of product listings or maintaining multiple niche blogs. Still, it is worth comparing it with other AI writing tools to find the best fit for your needs.