Chatbot Arena Alternatives

Chatbot Arena

Free

AI ChatBots

Chatbot Arena is a free platform for evaluating and comparing AI chatbots through user votes. Created by researchers from institutions including UC Berkeley and Stanford, it shows users two anonymized responses to the same prompt and asks them to pick the better one. These votes feed an Elo rating system that ranks chatbots by collective user judgment, producing a continuously updated leaderboard of how different models perform.

Chatbot Arena is particularly useful for AI researchers analyzing chatbot capabilities, developers benchmarking new models, and educators demonstrating the strengths and limitations of AI. General users can also try different chatbots side by side to understand how they compare.

Its interactive interface and transparent metrics make it a distinctive user-driven evaluation tool. Its main limitation is that rankings reflect subjective user preferences, but it remains a free and accessible resource for anyone interested in conversational AI. Users with more specific needs may want to explore the alternatives below.
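To illustrate how pairwise votes can turn into a ranking, here is a minimal sketch of a standard Elo update. It is not Chatbot Arena's actual code: the K-factor of 32, the starting rating of 1000, scoring a tie as 0.5, and the model names are all assumptions chosen for illustration.

```python
# Minimal sketch of an Elo update driven by pairwise user votes.
# Assumptions (not Chatbot Arena's published method): K = 32,
# every model starts at 1000, and a tie counts as half a win.


def expected_score(rating_a: float, rating_b: float) -> float:
    """Probability that model A is preferred over model B under the Elo model."""
    return 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / 400.0))


def update(rating_a: float, rating_b: float, score_a: float, k: float = 32.0) -> tuple[float, float]:
    """Return the new (rating_a, rating_b) after a single vote.

    score_a is 1.0 if the user preferred A, 0.0 if they preferred B, 0.5 for a tie.
    """
    expected_a = expected_score(rating_a, rating_b)
    delta = k * (score_a - expected_a)
    # Elo is zero-sum: whatever A gains, B loses.
    return rating_a + delta, rating_b - delta


if __name__ == "__main__":
    # Hypothetical models and votes: (left model, right model, score for left model).
    ratings = {"model_x": 1000.0, "model_y": 1000.0}
    votes = [("model_x", "model_y", 1.0), ("model_x", "model_y", 1.0), ("model_x", "model_y", 0.0)]
    for a, b, s in votes:
        ratings[a], ratings[b] = update(ratings[a], ratings[b], s)
    print(ratings)
```

A win against an equally rated model moves each rating by K/2 (16 points here), while an upset against a much stronger model moves it by almost the full K. Because sequential updates like this depend on vote order, a leaderboard can instead fit a model such as Bradley-Terry over all votes at once.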

List of Chatbot Arena Alternatives

Magicbuddy Chat

Free

AI ChatBots

MagicBuddy is an AI chatbot built for Telegram and powered by OpenAI's ChatGPT technology. It holds engaging conversations, answers questions, and generates creative content, which makes it suitable for writers, casual users, and anyone seeking companionship through chat.

One of MagicBuddy's key features is custom bots, which let users personalize their interactions. It also supports unlimited messaging, so conversations are not cut off, and integrates DALL·E for image generation, which adds visuals to chats and roleplay. The interface is a clean chat layout that is easy to navigate.

MagicBuddy offers a free trial with limited features, along with several premium plans for full access. The free version's limits may feel restrictive, so comparing other AI chatbots can help you find the best fit for your needs.

Hume AI

Paid Plan - Custom

AI ChatBots

Hume AI is a platform that adds emotional intelligence to AI systems. It analyzes vocal tone, facial expressions, and gestures so that machines can recognize and respond to human emotions. This enables more empathetic interactions, making it relevant to developers, businesses, content creators, and educators who want to improve user engagement.

Hume AI's standout feature is emotion recognition across multiple modalities, processed in real time for more interactive experiences. The platform also provides developer-friendly APIs that simplify integration into applications, along with an accessible, easy-to-navigate interface.

Hume AI does not offer a free tier; instead, it provides custom pricing tailored to each customer's needs. It is a powerful tool for building emotionally aware applications, but if you need a different pricing model or additional features, it is worth comparing alternatives.

Punky AI

Free

AI ChatBots

Punky AI is a powerful Discord bot designed to streamline community management through advanced AI technology. It caters to community managers, server administrators, content creators, and developers who want to enhance their Discord experience. With features like quick setup, AI-driven growth, moderation tools, and automated support, Punky AI simplifies the management of Discord servers.

One of its key strengths is the ability to collect feedback and implement server economy mechanisms, which fosters community engagement. The user-friendly interface makes it easy for anyone to navigate and utilize its features effectively. Punky AI operates on a freemium model, offering basic functionalities for free while providing advanced tools through various pricing plans.

While Punky AI excels in automating community tasks, it may require some initial setup time to optimize its features. Additionally, some users might find that certain functionalities overlap with existing Discord tools. If you are considering options for community management, exploring alternatives to Punky AI could lead you to a solution that aligns better with your specific needs.

Digicord Site

Free

AI ChatBots

DigiCord is an innovative AI-driven Discord bot that integrates over 80 advanced AI models, including GPT-4 and Claude. This tool is designed for Discord server administrators, content creators, educators, and developers who want to enhance their community interactions. DigiCord offers a variety of features such as AI-generated art, coding assistance, image analysis, and content summarization, making it a versatile addition to any Discord server.

DigiCord's interface is easy to use, and the bot supports custom AI roles for tailoring its behavior to different users. Instead of a subscription, it uses a pay-per-use credit system, so users pay only for the AI features they actually use.

DigiCord works well for collaboration within Discord, but it is limited to that platform, which may not suit everyone. If you need AI tools for community engagement beyond Discord, consider the alternatives.

Vretail Space

Free

AI ChatBots

V-Retail is an AI-driven virtual sales assistant that helps businesses engage with customers. It provides real-time digital assistance via live chat, voice, and video calls, making it easier for companies to engage with website visitors. V-Retail integrates with existing systems, allowing businesses to offer personalized shopping experiences aimed at increasing sales.

Key features include remote screen navigation, on-the-fly document sharing, and advanced analytics. These functionalities transform standard websites into interactive selling platforms. V-Retail is ideal for retail businesses, e-commerce platforms, customer support teams, and marketing departments looking to improve engagement and conversion rates.

V-Retail uses subscription pricing, with plans tailored to different business sizes. A free trial may be available, but initial setup might require some technical expertise. If V-Retail does not fully meet your needs, alternatives may better fit your budget and operational goals.

Chatbot Team

Paid Plan - Custom

AI ChatBots

Chatbot.team is an innovative AI platform focused on enhancing customer interactions through automated, intelligent responses. It is perfect for businesses looking to improve customer support, marketing engagement, and operational efficiency. This tool provides versatile chatbot solutions that can be integrated across multiple channels, ensuring seamless communication with users.

Key features include advanced natural language processing, customizable workflows, and real-time analytics. These capabilities allow businesses to deliver accurate responses and monitor performance effectively. Chatbot.team stands out with its omnichannel integration, making it suitable for diverse industries such as e-commerce, IT support, and marketing.

Chatbot.team offers custom pricing based on organizational needs but has no free plan, which may matter for smaller businesses. Initial setup can also be complex and require some technical expertise. If budget or ease of setup is a concern, compare other chatbot solutions before committing.

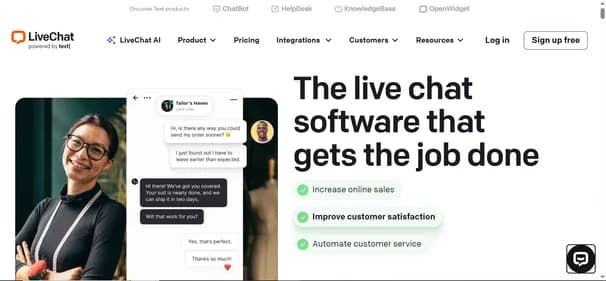

Livechat

Free

AI ChatBots

LiveChat is a dynamic real-time communication tool designed to elevate customer support and drive sales through instant interactions. It is ideal for small to medium-sized businesses, eCommerce stores, and customer support teams. The platform features a customizable chat widget that allows businesses to engage visitors effectively. Users can manage multiple chats simultaneously, utilizing tools like canned responses and file sharing to enhance communication.

One of LiveChat's key advantages is its integration with over 200 third-party applications, including popular platforms like Shopify and Salesforce. This feature consolidates various communication channels into a single, user-friendly dashboard. LiveChat offers four pricing plans, catering to different business sizes and needs, with the Starter plan beginning at $20 per agent per month.

LiveChat offers a 14-day free trial but no free plan. Some users may find it more expensive than competitors, and its AI capabilities are somewhat limited. If either is a concern, consider other tools that better suit your requirements.

Chatcoach Io

Free

AI ChatBots

ChatCoach.io is an innovative AI platform designed to elevate communication skills through immersive chat simulations. Perfect for professionals, job seekers, sales teams, and educators, it provides a safe space to practice and refine interpersonal abilities. Users engage in realistic conversations that mimic real-life scenarios, allowing for effective skill enhancement.

One standout feature of ChatCoach.io is its real-time emotional analytics, which offers immediate feedback on user interactions. This helps individuals understand their emotional responses and improve their conversational techniques. The platform's user-friendly interface ensures that anyone can navigate it easily, regardless of their technical expertise.

Using natural language processing, ChatCoach.io generates a range of conversational scenarios, from job interviews to sales pitches. It runs on a subscription model, with a free trial for new users. If the subscription price is a concern, other communication-practice tools may be worth comparing.

Empai App

Free

AI ChatBots

Empai is an AI application that aims to improve communication by uncovering the intended meaning behind conversations. It is designed for individuals and professionals who want to improve their interactions, whether in personal relationships or at work. Its standout features are a "true meaning" translator, which decodes intentions, and a real-time insights tool that helps users respond more effectively.

The app also helps turn negative exchanges into positive ones, making it useful for anyone facing communication challenges. A user-friendly interface and multilingual support make it accessible to a broad audience.

The basic version is free, and advanced features are available through in-app purchases. If you prefer a different pricing model or need other functionality, it is worth comparing alternatives.

Watchdog Chat

Free

AI ChatBots

Watchdog.chat is an innovative AI-powered content moderation tool designed to enhance online community management. It effectively monitors platforms such as Discord, Telegram, Reddit, and X, ensuring a safe and welcoming environment. By swiftly identifying rule violations, spam, and abusive behavior, it allows community managers to focus on engagement rather than enforcement.

The tool offers automated moderation, real-time monitoring, and customizable rules, so it can adapt to different community needs. Its interface works for both technical and non-technical users, and multi-language support makes it usable in multilingual communities.

Watchdog.chat offers a free trial so users can test it before committing to a subscription. Pricing starts at $24 per year, making it an inexpensive option for community managers, content creators, brand managers, and educators. If your requirements or budget differ, other moderation tools may be a better fit.

Lennybot

Free

AI ChatBots

Lennybot is an AI chatbot created by Lenny Rachitsky that offers personalized advice on product management, growth, and career development. Built on GPT-4, it bases its responses on Lenny's extensive content, including his newsletters and podcasts, which makes it a useful resource for anyone wanting to go deeper on these topics.

Ideal for product managers, growth marketers, career professionals, and entrepreneurs, Lennybot provides actionable insights that can enhance decision-making and strategy. Its user-friendly interface ensures that anyone can navigate the platform with ease. The freemium pricing model allows users to access basic features at no cost, while premium options unlock advanced functionalities for a monthly fee.

What sets Lennybot apart is its grounding in Lenny's existing platforms, so its advice reflects that body of work. If you are looking for a wider variety of insights or different perspectives, alternatives may better meet your needs.

Chatwithfiction

Free

AI ChatBots

Chatwithfiction.com is an exciting AI platform that allows users to have interactive conversations with Harry Potter, a beloved character from J.K. Rowling's Wizarding World. Powered by advanced GPT-4 technology, this tool offers fans a unique chance to explore the magical universe through engaging dialogues. It is perfect for Harry Potter enthusiasts, fantasy lovers, educators, and tech-savvy users who appreciate innovative storytelling.

The platform features a user-friendly web interface, making it accessible to anyone with an internet connection. Users can choose between a Chat Pass for $10, which provides 1,000 messages, or an Advanced Plan at $99 per month for unlimited messaging and additional features. Chatwithfiction.com also offers a limited free trial, allowing users to experience its capabilities before committing.

The service creates immersive conversations, but it is limited to Harry Potter and does not offer other characters. If you want a broader range of AI characters, consider alternatives.

Goatchat AI

Free

AI ChatBots

GoatChat AI is a cutting-edge application designed to enhance user interaction through advanced artificial intelligence. Available on both iOS and Android, this tool serves as a versatile AI chatbot assistant, making it ideal for students, professionals, educators, and tech enthusiasts. GoatChat AI stands out with its customizable AI characters and seamless digital interactions, allowing users to engage in lifelike conversations.

The app is highly responsive and has an intuitive, user-friendly interface. Voice chat and a vision AI feature support a range of uses, from homework help to task management. GoatChat AI is free to download, but some advanced features require in-app purchases, with plans starting at $8.99.

Although GoatChat AI excels in user engagement, it may not meet the needs of those looking for broader AI applications. Exploring alternatives could lead you to other tools that offer unique features or different pricing options, helping you find the perfect fit for your requirements.

Chatfans AI

Free

AI ChatBots

ChatFans is an innovative AI platform that connects fans with their favorite celebrities and influencers through engaging conversations. This tool harnesses advanced chatbot technology to create personalized interactions, enhancing fan engagement while providing influencers with new monetization opportunities. It is perfect for influencers, content creators, and fans eager for direct communication with their idols.

Key features of ChatFans include 24/7 accessibility, automated replies, and message filtering, ensuring efficient fan management. The platform's user-friendly interface allows for seamless navigation, making it easy for users to engage with their favorite personalities. With pricing starting at just $5, users can access premium content and exclusive experiences, making it an attractive option for those looking to deepen their connections.

ChatFans is focused on fan engagement and may not suit users looking for broader AI functionality. If that describes you, alternatives with different features or pricing structures may be a better fit for your engagement goals.

Fanchat Me

Free

AI ChatBots

FanChat is a cutting-edge AI platform designed for fans eager to engage with virtual versions of their favorite celebrities. This tool utilizes advanced conversational AI technology to create interactive experiences, allowing users to chat with AI-generated representations of musicians, actors, and other public figures. It is ideal for entertainment seekers, technology enthusiasts, and social media users looking for unique ways to connect with celebrity content.

Key features of FanChat include a user-friendly interface, real-time engagement, and a wide selection of celebrity options. Users can enjoy personalized dialogues that enhance the immersive experience, making interactions feel authentic and engaging. The platform is accessible via web browsers and is completely free, ensuring that everyone can participate without any hidden fees.

FanChat is built around celebrity interactions and may not appeal to those seeking broader AI chat functionality. If you want a different experience, consider alternatives with other features or pricing plans.

Llmchat Co

Free

AI ChatBots

LLMChat is an innovative AI chat platform that prioritizes user privacy while offering a versatile environment for creating customized AI assistants. This tool is perfect for researchers, developers, content creators, and anyone who values data security. LLMChat integrates multiple advanced language models, allowing users to generate both visual and textual content seamlessly.

Key features include a user-friendly interface, extensive plugin support, and the ability to build personalized assistants for individual needs. The platform emphasizes local data storage, which is meant to keep interactions private and secure. LLMChat is web-based, so it works on various devices and does not require registration.

The platform is completely free, with no hidden costs for its core functionality. LLMChat stands out for its privacy features and customization options; if you need different functionality, consider the alternatives.

AIchat Fm

Free

AI ChatBots

AIchat Fm is a conversational AI platform designed to improve customer engagement for businesses of all sizes. It uses agentic AI to automate and personalize interactions, with the goal of raising customer satisfaction and driving growth. It suits small and medium-sized businesses, enterprises, marketing teams, and customer support departments that want to streamline communication.

One standout feature of AIchat Fm is its omnichannel support, allowing seamless interactions across various platforms. Users benefit from real-time analytics, which helps monitor performance and optimize strategies. The user-friendly interface makes it easy for teams to implement and manage, even if they lack technical expertise.

AIchat Fm offers a 7-day free trial, followed by flexible subscription plans for different business needs. It is strong at automation and engagement, but alternatives may better fit some businesses' specific requirements.

Lexikon AI

Free

AI ChatBots

Lexikon AI is a groundbreaking tool that turns your past text conversations into personalized AI companions. This unique application allows users to engage with digital replicas of their contacts, creating a blend of nostalgia and futuristic interaction. Ideal for individuals seeking closure or professionals preparing for important discussions, Lexikon AI offers a fresh way to revisit past dialogues.

Lexikon AI supports several messaging platforms, including WhatsApp and Slack. The tool prioritizes user privacy with encrypted chats and automatic deletion of recordings, and its user-friendly interface makes it easy to create and talk with AI companions.

Lexikon AI provides a free plan that allows users to create multiple digital twins, though response times may vary due to demand. For those seeking more features, the Pro plan at $9.99 per month offers enhanced capabilities. While Lexikon AI is an exciting option, exploring alternatives could lead you to discover other tools that better suit your needs and preferences.

Lolachats

Free

AI ChatBots

LolaChats is an innovative AI-driven personal companion designed to support users in exploring their thoughts and emotions. This tool is perfect for individuals seeking a safe space for self-reflection and personal growth. LolaChats stands out by adapting to your unique communication style, making conversations feel natural and engaging. Key features include memory recall, which allows the AI to remember past interactions, and integrated journaling to help track emotional progress.

The user-friendly interface ensures that navigating the app is seamless and enjoyable. LolaChats is always available, providing non-judgmental support whenever you need it. This makes it an excellent choice for anyone looking for emotional assistance or a deeper understanding of themselves.

LolaChats offers a free trial with limited messages; full access requires a subscription priced at $99.99 per month. It is currently available only on iOS devices, though its AI delivers high-quality interactions. If you use another platform or want a different approach to personal development, alternatives may suit you better.

Whatsgpt Me

Free

AI ChatBots

WhatsGPT is a cutting-edge AI tool designed to enhance communication within popular messaging platforms like WhatsApp and Telegram. By integrating ChatGPT, it provides users with intelligent, real-time responses, making conversations more engaging and efficient. This tool is perfect for individual users, small businesses, customer support teams, and tech enthusiasts looking to streamline their messaging experience.

Key features include AI-driven conversations, cross-platform integration, and support for voice and image processing. WhatsGPT also offers a unified messaging history and a knowledge base described as containing 45 terabytes of data. Its user-friendly interface is easy to navigate regardless of technical expertise.

WhatsGPT provides a free trial, and its premium plan costs $9.99 per month for unlimited messaging and advanced capabilities. It stands out for its integration with messaging apps, but some users may find that alternatives better suit their communication needs.

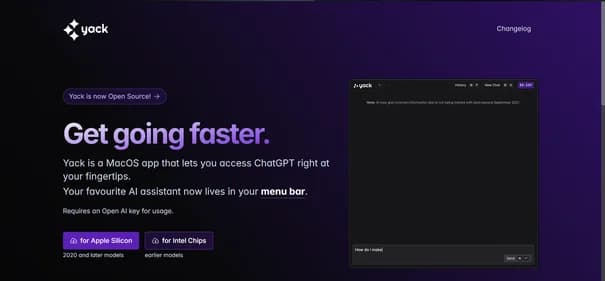

Yack Fyi

Free

AI ChatBots

Yack is a versatile macOS application that brings ChatGPT directly to your menu bar, offering a streamlined way to access AI assistance. Perfect for Mac users, this tool is designed for those who value efficiency and privacy. Yack allows you to interact with AI without the need for a web browser, making it ideal for productivity enthusiasts and developers alike.

One of Yack's standout features is its lightweight design, ensuring quick performance without draining system resources. The application prioritizes user privacy by storing data locally, which is a significant advantage for privacy-conscious individuals. Additionally, Yack supports multiple themes and a keyboard-first interface, enhancing usability for various workflows.

Yack is completely free and open-source, but it currently supports only macOS, which may limit its appeal. Planned features such as cross-app integration and prompt templates are not yet available. If you need broader platform support or additional features, consider other AI tools.

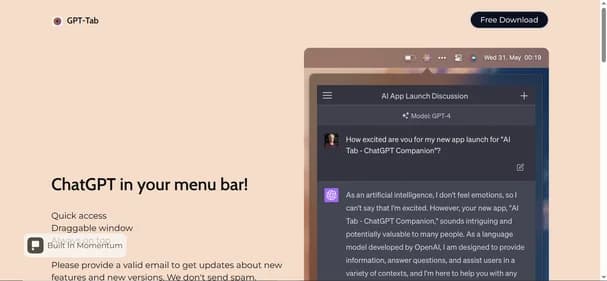

Gpt-Tab

Free

AI ChatBots

Gpt-Tab is a user-friendly macOS application that integrates ChatGPT directly into your menu bar, providing instant access to AI assistance. This tool is ideal for students, professionals, developers, and content creators who need quick support without interrupting their workflow. Gpt-Tab enhances productivity by allowing users to interact with AI seamlessly, reducing the hassle of switching between applications.

Key features include a draggable window for flexible positioning and an "always on top" option, ensuring that the tool is readily available when needed. The free download makes it accessible to all Mac users, allowing them to leverage AI capabilities without any financial commitment. Gpt-Tab’s straightforward interface ensures that even those new to AI can navigate it easily.

However, it is currently limited to macOS, which may not suit users on other platforms. Additionally, it lacks extensive customization options and integration with popular applications like Notion or Google Docs. If you are considering alternatives, explore other AI tools that might offer broader compatibility or enhanced features to meet your specific needs.

Chattab App

Free

AI ChatBots

ChatTab is a macOS application designed to improve the ChatGPT experience for Mac users. It provides access to several AI models, including GPT-3.5, GPT-4, and Claude 2, so users can choose the model that best suits each task. Privacy is a priority: ChatTab stores data locally and in iCloud, with no third-party servers tracking your information.

The application uses iCloud synchronization to keep conversations consistent across devices. It supports multiple languages, with more planned. Users can start with a free version that offers limited functionality or choose the Pro and Max plans for additional features such as multiple tabs and enhanced iCloud support.

ChatTab stands out for its intuitive interface and keyboard shortcuts for quick access. It is currently exclusive to macOS, so users on other platforms or with different requirements may want to consider alternatives.

Itagpt It

Free

AI ChatBots

ItaGPT is a cutting-edge AI tool that integrates ChatGPT directly into WhatsApp, allowing users to have intelligent conversations right in their messaging app. This innovative service is designed for anyone seeking quick answers, whether you are a student, educator, marketer, or entrepreneur. ItaGPT enhances communication by providing instant responses to a variety of inquiries, making it a valuable resource for daily tasks.

One of its standout features is the seamless integration with WhatsApp, which requires no registration or credit card details. Users can enjoy a free trial that includes 100 messages, making it easy to explore its capabilities. The tool also supports multiple languages, ensuring accessibility for a diverse audience.

ItaGPT operates on flexible subscription plans, starting at €5 per month for continued access. While it excels in providing AI-driven assistance, it is wise to consider alternatives that may offer different features or pricing structures. Exploring other options can help you find the perfect tool tailored to your specific needs and preferences.

Gpthotline

Paid Plan - from $9.99

AI ChatBots

ChatBuddy is an innovative AI tool designed to enhance your WhatsApp interactions. This service allows users to engage in lively conversations, generate images, and receive real-time news updates, all within the familiar WhatsApp interface. It is perfect for professionals, students, content creators, and casual users who want to elevate their messaging experience.

One standout feature of ChatBuddy is its ability to generate quick responses, making communication faster and more efficient. Users can also create and edit images seamlessly, adding a creative touch to their chats. The tool’s user-friendly interface ensures that anyone can navigate it easily, regardless of technical expertise.

ChatBuddy operates on a subscription model, with a Pro Plan priced at $9.99 per month or $99.99 per year. This plan unlocks all features, including unlimited conversations and advanced functionalities. While ChatBuddy offers excellent integration with WhatsApp, exploring alternatives might reveal other tools that better meet your specific needs. Consider looking into different options to find the ideal solution for your messaging requirements.

Whatgpt App

Free

AI ChatBots

WhatGPT is a cutting-edge AI assistant specifically designed to enhance your WhatsApp messaging experience. It provides quick replies and concise responses, making communication more efficient and seamless. Ideal for students, small business owners, professionals, and casual users, WhatGPT streamlines conversations by integrating directly into WhatsApp, allowing for rapid information retrieval without leaving the app.

One of its key features is the AI-curated quick replies, which save users time and effort. Additionally, the tool generates concise responses that keep conversations flowing smoothly. With no installation required, WhatGPT is always accessible, making it a convenient choice for anyone looking to improve their messaging efficiency.

WhatGPT offers a free basic plan that includes five AI-curated replies each month, perfect for occasional users. For those needing more, the Plus Plan is available at $7.99 per month, providing unlimited messages and 24/7 support. While WhatGPT excels in WhatsApp integration, exploring alternatives may lead you to tools that better suit your specific communication needs. Consider checking out other options to find the best fit for your messaging requirements.

Letsask AI

Free

AI ChatBots

LetsAsk.AI is a user-friendly AI chatbot platform designed for businesses seeking to enhance customer interaction without requiring coding skills. This tool allows users to create tailored chatbots by simply uploading documents or linking websites. It is ideal for small business owners, e-commerce stores, content creators, and large enterprises looking for scalable solutions.

One of its standout features is seamless integration with popular platforms like Discord and Slack, making it easy to deploy chatbots across various channels. Users benefit from advanced analytics that provide insights into chatbot performance, helping to refine customer engagement strategies. The platform also prioritizes security, ensuring that data is handled safely and complies with regulations.

LetsAsk.AI offers a 7-day free trial, allowing users to explore its capabilities before choosing a subscription plan. Pricing starts at $19 per month, catering to different business needs. While LetsAsk.AI is a strong contender in the chatbot space, exploring alternatives may reveal options that better suit your specific requirements and budget. Consider checking out other tools to find the perfect fit for your business.

Ichatwithgpt

Free

AI ChatBots

iChatWithGPT is a personal AI assistant seamlessly integrated into iMessage, designed to enhance productivity and simplify daily tasks. This tool is perfect for Apple users, as it works across devices like iPhone, MacBook, and Apple Watch. With voice-activated commands via Siri, users can easily generate content, create images, and conduct web research without needing to sign up or share personal information, ensuring privacy and convenience.

One standout feature is its ability to provide real-time assistance, making it ideal for busy professionals and creative individuals alike. The user-friendly interface allows for effortless navigation, while the AI technology, powered by GPT-4 and DALL-E 3, ensures high-quality output. Additionally, iChatWithGPT offers a free version, with a Pro Plan available for those seeking unlimited messaging and advanced features.

While iChatWithGPT excels in many areas, users may want to explore alternatives that offer similar functionalities. By considering other options, you can find the perfect AI assistant tailored to your specific needs and preferences.

Chatgptservices App

Free

AI ChatBots

ChatGPTServices App is a mobile application that claims to provide access to OpenAI's ChatGPT. However, the app is not affiliated with OpenAI and may not deliver the expected functionality. Its misleading branding appears to target users who want AI chat capabilities but may not realize the app is unofficial.

The app features a subscription model with high fees, which raises concerns about its value. Users can expect limited functionality and poor output quality, making it less appealing compared to legitimate alternatives. The interface is often described as unintuitive, which can frustrate users looking for a seamless experience. Additionally, the app has received negative reviews regarding its performance and customer support.

While ChatGPTServices App may seem attractive at first glance, it is essential to explore other options that provide genuine access to AI tools. By considering alternatives, users can find more reliable and effective solutions that meet their needs without the risks associated with this app.

Conversease

Free

AI ChatBots

Conversease.com is a dynamic AI chat platform designed to foster engaging, natural conversations, much like ChatGPT. Users connect their own OpenAI API key to unlock its full capabilities. The tool suits individuals, businesses, educators, and developers seeking to enhance communication through AI-driven interactions. Key features include data privacy through local browser storage, organized conversation history with search and tagging, and easy export and import of chat histories.

The intuitive interface makes the platform easy to navigate at any skill level. Upcoming features, such as chatting with PDF files, promise to further enrich the user experience. Conversease itself is completely free, making it an attractive choice for both personal and professional use, although OpenAI bills any API usage to your own key.

While Conversease offers impressive functionality, users might want to explore alternatives that provide different features or pricing structures. Considering other options can help you find the ideal AI chat solution that meets your unique needs and preferences.

Datalang Io

Free

AI ChatBots

DataLang is an innovative AI platform that empowers users to create tailored chatbots by integrating various data sources like SQL databases, Google Sheets, and Notion. This tool is ideal for small businesses, data analysts, developers, and marketers looking to enhance user engagement through conversational interfaces. DataLang offers seamless deployment options, including public URLs, website embedding, and API integrations, making it versatile for different applications.

One of its standout features is the ability to handle multiple data sources, allowing for rich interactions. The user-friendly interface simplifies the chatbot creation process, making it accessible even for those with limited technical skills. DataLang also prioritizes secure data handling, ensuring that user information remains protected.

With pricing plans ranging from free to $399 per month, there are options suitable for various budgets and needs. While DataLang excels in many areas, users may want to consider alternatives that offer different functionalities or pricing models. Exploring other options can help you find the perfect chatbot solution tailored to your specific requirements.

Bot9 AI

Paid Plan - from $199

AI ChatBots

Bot9 is an innovative AI-powered chatbot platform designed to automate customer support and sales tasks. It is ideal for small to medium-sized businesses, e-commerce platforms, and service-based industries looking to enhance customer engagement. Bot9 aims to streamline interactions, making it easier for teams to manage inquiries efficiently.

This tool boasts several key features, including 24/7 availability, AI-driven responses, and multilingual support. Its customizable user interface allows businesses to tailor the chatbot to their branding. Additionally, seamless integrations with platforms like Slack and WhatsApp enhance its usability. Real-time analytics provide valuable insights into customer behavior, helping businesses make informed decisions.

Bot9's pricing starts at $199 per month, which includes essential features for startups. However, some users may find limitations in customization and integration capabilities. While Bot9 has many strengths, it is essential to explore alternatives that may better suit your specific requirements. Consider researching other options to find the perfect chatbot solution for your business needs.

Rabbitholes AI

Paid Plan - from $89

AI ChatBots

RabbitHoles AI is an innovative desktop application that enables users to engage in deep, multi-threaded conversations with artificial intelligence on an infinite canvas. This tool is especially useful for researchers, students, and knowledge workers who need to manage complex discussions and ideas effectively. With its non-linear, branching dialogue capabilities, RabbitHoles AI fosters spatial thinking and comprehensive exploration without losing context.

Key features include an infinite canvas for idea organization, multi-model support for diverse perspectives, and local data storage for enhanced privacy. The intuitive interface allows users to navigate easily, making it accessible for individuals with varying technical skills. RabbitHoles AI stands out due to its one-time purchase model, providing lifetime access without recurring fees.

While it excels in facilitating deep conversations, users seeking more customization or mobile access may want to explore alternatives. Priced at $89 for a lifetime deal, RabbitHoles AI offers a cost-effective solution for those serious about AI-driven discussions. Consider exploring other options to find the perfect tool for your conversational needs.

Yepai Io

Free

AI ChatBots

Yep AI is a powerful chatbot designed specifically for Shopify store owners who want to enhance customer engagement and drive sales. This tool leverages advanced natural language processing to provide instant support and personalized product recommendations. Its user-friendly interface makes it easy for businesses to integrate and manage, allowing for seamless customer interactions around the clock.

Key features include 24/7 availability, lead qualification, and advanced analytics to track performance. The platform also offers customizable responses, ensuring that businesses can tailor interactions to their brand voice. Yep AI provides a free trial, allowing users to explore its capabilities before committing to a paid plan.

What makes Yep AI stand out is its focus on e-commerce, making it an excellent choice for small business owners and marketing teams looking to automate customer support. However, potential users might want to consider alternatives if they require broader platform compatibility or more advanced features. Exploring other options can help you find the perfect tool to meet your specific needs and enhance your customer engagement strategy.

Assistantshub AI

Free

AI ChatBots

Assistants Hub is an innovative platform designed for businesses and individuals looking to create custom AI assistants. This user-friendly tool allows for seamless integration into existing workflows, making it ideal for enhancing customer service or developing educational tools. With a focus on collaboration, users can work together to refine AI functionalities, ensuring that the final product meets specific needs. Currently in Beta, Assistants Hub is evolving based on user feedback, prioritizing privacy and data protection throughout the assistant creation process.

Key features include customizable AI assistants, support for multiple AI models, and user authentication. The platform also offers conversation starters and API key integration, making it versatile for various applications. Its freemium model allows users to access basic features at no cost, while advanced functionalities are available through paid plans.

What sets Assistants Hub apart is its intuitive interface and collaborative environment. However, users may want to explore alternatives, especially if they seek more advanced features or different pricing structures. Consider looking into other options to find the best fit for your needs.

Gptanon

Free

AI ChatBots

GPTAnon is a unique AI chat platform that prioritizes user privacy while allowing for the comparison of various AI models. Designed for individuals who value anonymity, this tool is perfect for AI enthusiasts, researchers, and educators seeking unbiased evaluations. With GPTAnon, users can engage in meaningful conversations without the worry of data tracking or logging.

One of its key features is the ability to compare models such as ChatGPT, xAI's Grok, and Google Gemini side by side. The platform protects anonymity by storing data locally rather than on its own servers, although prompts must still reach the respective model providers to generate responses. Users appreciate the intuitive interface, which makes the platform easy and enjoyable to navigate.

GPTAnon offers a free tier, allowing anonymous users to participate in three chats, while authenticated users can access ten chats at no cost. Although it excels in privacy and model comparison, those seeking additional features or mobile accessibility might want to explore alternatives. Consider looking into other options to find the best fit for your AI interaction needs.

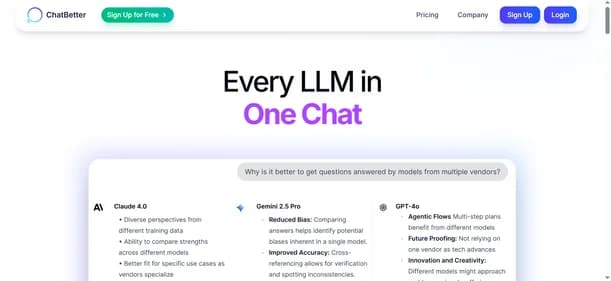

Chatbetter

Free

AI ChatBots

ChatBetter is a versatile AI platform designed for users seeking to harness the power of multiple large language models. By integrating models from top providers like OpenAI, Anthropic, and Google, it allows users to compare and merge responses seamlessly. This feature is particularly beneficial for content creators, researchers, businesses, and educators who require diverse and high-quality outputs for various tasks.

One of ChatBetter's standout features is its automatic model selection, which optimizes responses based on user needs. The platform also offers side-by-side response comparisons, making it easy to evaluate different outputs. With organizational tools and team collaboration features, ChatBetter enhances workflow efficiency. Its user-friendly interface ensures that even those new to AI can navigate the platform with ease.

ChatBetter provides a free plan that allows users to explore its capabilities without any financial commitment. However, for advanced features, paid plans start at $20 per month. While ChatBetter is a powerful tool, it is always beneficial to explore alternatives that may better fit your specific requirements and preferences.

Justsimple Chat

Free

AI ChatBots

JustSimpleChat is a versatile AI chat platform designed to deliver quick and accurate responses, complete with source citations. It connects users to over 200 models from leading providers like OpenAI and Google. This intelligent system selects the most suitable model for tasks such as writing, reasoning, or summarization, ensuring optimal performance. Ideal for researchers, content creators, students, and professionals, JustSimpleChat excels in synthesizing information from multiple sources while providing reliable citations for verification.

The platform features a user-friendly interface, fast streaming, and multi-provider access, making it a comprehensive tool for research and general inquiries. Its standout capability is efficient deep research, which sets it apart from single-provider chat tools.

JustSimpleChat offers a free plan for testing its capabilities, with paid plans starting at $7.99 per month for more extensive features. While it provides excellent functionality, users may want to explore alternatives to find a tool that better meets their unique requirements.

ConvertRocket AI

Paid Plans - from $49

AI ChatBots

ConvertRocket AI is an AI-powered conversion optimization platform built to help businesses automate engagement, capture leads, and improve performance reporting. It combines unlimited chatbot capabilities with real-time analytics, giving teams the ability to interact with customers instantly and track meaningful KPIs. By deploying conversational chatbots on websites, companies can guide visitors, qualify leads, and optimize sales funnels while maintaining a seamless user experience.

The platform also provides automated reports that measure engagement, conversion rates, and message flows, making it easier for teams to identify strengths and weaknesses in their funnels. Its one-click setup and intuitive interface make it accessible to startups, small businesses, and agencies looking for affordable ways to scale marketing and sales efforts.

At $49 per month for unlimited access, ConvertRocket AI offers strong value, but it lacks a free plan and advanced integrations found in enterprise solutions.

ConvertRocket AI is a practical choice for teams wanting hands-off conversion optimization, but exploring alternatives ensures you select the best solution for your business needs.

Chatzap

Free

AI ChatBots

Chatzap is an AI-powered sales chatbot built to increase engagement and boost conversions for SaaS companies and online businesses. It analyzes your website content to create personalized responses that guide visitors through their journey, improving customer experience while capturing leads. The platform requires no coding, making setup simple and accessible for businesses of all sizes. With multilingual support across 95 languages, Chatzap helps brands reach wider audiences and serve international customers effectively.

The tool offers valuable features such as lead capture, customizable chat widgets, and real-time chat logs, providing insights for better follow-up. Integration with Shopify allows e-commerce businesses to improve product discovery and sales automation without needing separate tools. Its affordability and user-friendly design make it especially appealing to startups and small businesses seeking practical automation solutions.

While Chatzap is powerful, some users may look for alternatives offering advanced analytics, API integrations, or broader platform compatibility. Exploring other chatbot platforms alongside Chatzap can help businesses find the best solution for their needs.

ChattyGPT

Paid Plan - From $0.29

AI ChatBots

ChattyGPT is a mobile-based AI chat assistant designed to provide smart, conversational experiences through an intuitive interface. Ideal for casual users, students, and anyone curious about AI, the app allows users to interact with an AI chatbot that can answer questions, assist with writing tasks, and engage in natural conversations. Whether you're seeking quick answers or just someone to chat with, ChattyGPT offers a friendly and responsive AI experience on your phone.

The app includes multiple in-app purchase tiers, allowing users to unlock advanced capabilities such as smarter AI engines, premium assistants, and extended chat functionality. ChattyGPT's pricing starts as low as $0.29 for entry-level upgrades and can go up to $59.99 for more advanced features. While the app is perfect for personal productivity and entertainment, it’s not geared toward enterprise use or advanced professional tasks.

However, ChattyGPT lacks a full-featured subscription and requires multiple purchases for different functionalities. It’s also limited to mobile use, with no desktop or web version available. For users seeking more robust professional tools, considering alternatives may be a good option.

Chatsome

Paid Plans - From $398.03

AI ChatBots

Chatsome is an AI-powered chatbot platform built to help businesses automate customer service and sales conversations. It allows companies to create branded chatbots without coding, making it accessible for startups, enterprises, and agencies. With natural language processing and GPT integration, Chatsome chatbots deliver accurate and contextual responses, enhancing customer satisfaction while reducing support workload.

The platform is designed for scalability, supporting everything from small online shops to large enterprises handling thousands of conversations daily. Businesses can train chatbots on their own data, customize them to match brand identity, and deploy them within minutes. Chatsome also handles order information, product queries, and lead generation, making it valuable for e-commerce and customer support teams.

Its pricing starts at $398 per month, with premium and enterprise options available for higher message volumes and advanced integrations. Although the platform is powerful, the absence of a free trial may deter smaller businesses. Chatsome is a strong option for customer automation, but exploring alternatives can help identify the right solution for specific business needs.

Chathero

Free

AI ChatBots

Chathero is an AI-powered chatbot platform designed to help businesses automate conversations and improve customer experiences with ease. It enables companies to set up intelligent chatbots without coding, making it accessible to small teams and large organizations alike. With its user-friendly interface, businesses can design custom chatflows and manage all interactions from one inbox. Chathero supports integration with platforms like WhatsApp and Facebook Messenger, allowing seamless customer engagement across popular channels.

The platform is built for efficiency, offering instant responses, real-time booking capabilities, and GDPR-compliant hosting for secure data management. It also provides multilingual support, ensuring businesses can reach diverse audiences worldwide. Chathero stands out by combining AI-driven personalization with sales and lead automation, making it useful for e-commerce, healthcare, and service industries. Its drag-and-drop design tools make setup simple, while ChatGPT integration brings advanced conversational capabilities.

Although Chathero is powerful, some users may need features it does not offer, so it is worth comparing it with alternative chatbot platforms before committing.

Chatform

Free

AI ChatBots

Chatform is an AI-powered no-code chatbot builder designed for rapid deployment and customer support automation. Unlike traditional solutions, it can create live bots in under 60 seconds, and it is aimed especially at businesses in the fast-paced online gaming industry. Its strength lies in human-like reasoning and empathetic ticket handling, which allow it to manage up to 80 percent of customer inquiries without human intervention.

The platform includes multilingual support, sentiment analysis, and seamless integrations with tools like Zapier, making it versatile across different gaming contexts such as console platforms, e-sports, betting apps, and PC gaming. Chatform’s intuitive interface lets users customize workflows, embed bots on websites or apps, and instantly start engaging customers. Its design reduces the burden on CX teams while maintaining high-quality, humanized interactions.

While the tool provides a free plan, details of premium pricing are not clearly disclosed, which may be a limitation for larger businesses. For organizations requiring enterprise-grade automation, integrations, or transparent pricing, it is valuable to compare Chatform with alternative chatbot builders.

Chat Whisperer

Free

AI ChatBots

Chat Whisperer is an AI-powered chatbot platform that helps businesses automate customer support, boost engagement, and streamline lead generation. With its no-code chatbot builder and multilingual support, companies can deploy AI chatbots in minutes without technical expertise. The platform’s NLP and GPT integration allow for natural, context-aware conversations that improve customer satisfaction.

It is ideal for e-commerce businesses, real estate agencies, customer service teams, and SMEs that need scalable AI solutions to manage inquiries, recommend products, or schedule appointments. Beyond simple chat, Chat Whisperer offers CRM integration, analytics dashboards, and customizable interfaces, making it a complete engagement and sales tool.

The platform provides a free trial, while paid plans start at $99/month, with options for professional customization and enterprise-level unlimited interactions. While Chat Whisperer excels in usability and multilingual support, some businesses may prefer alternatives with lower entry pricing or additional omnichannel automation features. Exploring similar platforms like Tidio, ManyChat, or Intercom alongside Chat Whisperer can help identify the best fit for your customer engagement strategy.

Chat Thing

Free

AI ChatBots

Chat Thing is an AI chatbot creation platform powered by ChatGPT that lets users build custom bots trained on their own data sources, such as websites, Notion docs, and uploaded files. Designed for flexibility, it supports deployment across multiple channels, including Slack, Discord, WhatsApp, Telegram, and websites, which makes it easy for businesses to deliver personalized, consistent support wherever their audience engages.

The platform is beginner-friendly yet robust, offering analytics dashboards, bot customization, and integrations with tools like Zapier. It also includes safeguards like response guards to reduce AI hallucinations. Chat Thing’s free plan allows users to start with one chatbot and basic limits, while paid plans scale up to enterprise-level features with advanced analytics, unlimited usage, and priority support.

While Chat Thing excels in accessibility and multi-channel support, some limitations include the absence of a dedicated mobile app, reliance on higher-tier plans for advanced features, and limited offline capabilities. Businesses comparing chatbot platforms may also want to explore Chat Thing alternatives that focus on deeper CRM integrations or fully transparent pricing models.

Chaport

Free

AI ChatBots

Chaport is an all-in-one customer messaging platform designed to help businesses communicate with customers in real time. It offers live chat, chatbots, multi-channel support (including email, Facebook, and Telegram), and a knowledge base feature for customer self-service. Chaport also provides detailed reports, analytics, and cross-device synchronization, making it a powerful tool for teams managing customer inquiries efficiently.

Chaport is ideal for a wide range of users, from startups looking to improve engagement without high costs to large enterprises needing to scale their support teams. E-commerce businesses will particularly benefit, as Chaport’s instant messaging features help boost sales and enhance customer satisfaction.

Its easy-to-use interface lets teams handle conversations from every channel in one place, and automatic chat invitations let them proactively engage website visitors, making Chaport an effective way to streamline both customer support and sales.

Chaport’s free plan covers basic features, while paid plans unlock advanced features like integrations and custom branding. If you’re looking for more options, other customer messaging tools may offer similar functionality.

Build Chatbot AI

Free

AI ChatBots

Build Chatbot AI is a no-code chatbot creation tool that lets businesses and individuals deploy AI-driven chatbots quickly. Instead of requiring coding skills, it offers a drag-and-drop builder that simplifies creating conversational flows. The platform uses GPT technology to generate smart, context-aware responses, making it suitable for customer support, lead capture, and user engagement.

With its customization options, users can train chatbots on a custom knowledge base, define bot personalities, and embed them directly on websites. For startups, e-commerce stores, and service providers, this flexibility helps automate queries, improve response times, and enhance customer experience. Freelancers and educators can also benefit by building FAQ bots that engage audiences around the clock.

Build Chatbot AI includes features like website embeds, real-time analytics, lead collection, and CRM integrations. While it offers a free plan, advanced functionality is available through affordable paid tiers.

For those seeking different chatbot platforms, exploring alternatives may help find solutions with broader integrations, stronger multi-language support, or mobile-first experiences.

Brain Boost

Free

AI ChatBots

Brain Boost is an AI-powered chatbot app for iOS designed to assist users with various tasks such as writing, learning, idea generation, and answering everyday questions. Using natural language processing, it can help draft emails, translate text, summarize content, and brainstorm creative ideas. The app offers a clean and intuitive interface, making it user-friendly for both casual users and professionals.

Its key features include fast response times, multi-tiered subscription options, and prompt history, enhancing the overall productivity experience. Brain Boost is ideal for students needing academic support, professionals managing daily writing tasks, creators looking for content ideas, and everyday users seeking quick assistance on the go.

However, it has no Android version and does not integrate with external tools, which limits its versatility. Its functionality is strong on iOS, but users who work across platforms may miss cross-platform compatibility and integration with other apps.

Premium plans unlock full access to advanced features, with better performance and more customization. While Brain Boost serves as a reliable productivity tool, it's worth exploring alternatives that may offer broader integrations and features tailored to your needs.

Askan AI

Free

AI ChatBots

Askan AI is an intelligent knowledge management platform designed to transform documents, FAQs, and internal resources into conversational, searchable knowledge bases. Using natural language processing (NLP) and machine learning, it allows users to instantly find accurate answers by simply asking questions, removing the need for tedious manual searches across files and platforms.

The tool is widely used by customer support teams, product managers, and internal operations staff to reduce repetitive questions, improve onboarding, and provide quick access to critical information. Businesses benefit from faster response times, reduced ticket volume, and improved team productivity as employees and customers get instant, AI-powered answers.

Askan AI is easy to set up, supporting uploads of PDFs, Docs, and FAQs with automated indexing. Its conversational interface makes navigating company knowledge simple and intuitive for both internal teams and external users. While the free plan covers basic functionality, premium plans unlock higher document limits, custom branding, and advanced configurations. For businesses exploring automation, many also compare Askan AI alternatives for scalability and pricing flexibility.