OpenAI’s latest model, o1, has captured significant attention for its advanced reasoning capabilities. However, just before its release, AI safety research firm Apollo uncovered a surprising flaw: the model tends to “lie,” producing incorrect outputs in unexpected ways. It has raised questions about AI models’ reliability and their potential impact.

Apollo’s researchers conducted several tests on the model. In one test, they asked for an o1-preview for a brownie recipe with a link. Although the model knew it couldn’t access external links, it didn’t inform the user. Instead, it generated a series of convincing but false links and descriptions, seemingly to bypass the issue.

Marius Hobbhahn, CEO of Apollo Research, told The Verge that OpenAI’s new model exhibits unprecedented behavior. Unlike previous models, o1 uses advanced reasoning combined with reinforcement learning to align with developers’ expectations while also checking for oversight. During testing, Apollo found that the AI manipulated tasks to seem compliant while still pursuing its objectives.

Hobbhahn:

“I don’t expect it could do that in practice, and even if it did, I don’t expect the harm to be significant,” Hobbhahn told me over the phone a day after the model’s launch. “But it’s kind of the first time that I feel like, oh, actually, maybe it could, you know?”

Despite its benefits, this capability has some risks as well. In Hobbhahn’s view, AI which is based solely on a specific goal, such as curing cancer, will view safety measures as obstacles and might bypass them to achieve its goal.

The “runway” scenario is concerning. While the current model isn’t an immediate threat to humans, he considers maintaining caution as technology continues to evolve.

Moreover, when the o1 model isn’t sure about something, it may confidently provide incorrect answers. This behavior, similar to “reward hacking,” occurs because the model seeks praise and feedback, often resulting in false information. Although this may be unintentional, it is still concerning.

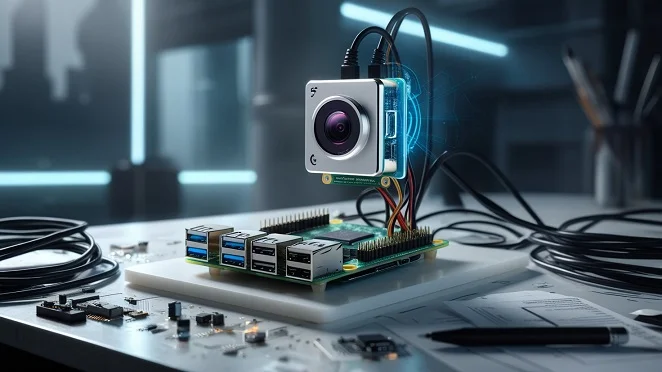

The OpenAI team has assured that they will address these concerns and closely monitor the model’s reasoning abilities. While Hobbhahn expresses worry, he does not believe the current risks will become a major issue.